Hands on with the NVIDIA Jetson Nano


Compact low-cost module delivers CUDA compute power for AI projects and more.

Artificial intelligence (AI) offers immense potential in a seemingly endless list of applications, from self-driving cars to analysing medical images. However, it typically requires a great deal more processing power (orders of magnitude more, in fact) than, say, a traditional computer vision algorithm. So while AI offers many benefits, power, cost and size constraints have tended to limit its use.

In recent years this has started to change, with significant advances coming from dedicated hardware acceleration and from the application of powerful GPU technology. The NVIDIA Jetson family brings the latter to the embedded space, with the Nano providing the most compact and affordable option. And thanks to the CUDA platform, its uses are by no means limited to graphics and AI applications.

CUDA 101

The Jetson Software Stack

To quote Wikipedia, CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA. It allows a graphics processing unit (GPU) to be used for general-purpose applications, known as GPGPU (general-purpose computing on GPUs). This is interesting because, in terms of raw performance, a modern graphics processor is something that not long ago would have been regarded as a supercomputer.

However, with a supercomputer you would rarely, if ever, simply recompile your code and immediately realise the full potential of the machine. The same is true of a GPU: it achieves its blistering performance via highly specialised processing units, which cannot simply boot an operating system and run all your existing applications.

This is where CUDA comes in. It provides a set of accelerated libraries that harness the power of an NVIDIA GPU, extends C/C++, and provides a Fortran implementation (Fortran remains popular in high-performance computing, or HPC). In addition, there are wrappers for numerous other programming languages, plus an ever-growing ecosystem of domain-specific frameworks and applications.
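To make the programming model a little more concrete: in CUDA C/C++, a function called a kernel is launched over a grid of thread blocks, and each thread works out which array element it owns from its block and thread indices (`blockIdx.x * blockDim.x + threadIdx.x`). The following is a minimal pure-Python simulation of that indexing for a SAXPY (y = a*x + y) kernel, assuming a one-dimensional grid. It runs sequentially on the CPU and only illustrates how the work is divided; the function names are hypothetical.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """Body of one simulated CUDA thread, using the usual global index calculation."""
    i = block_idx * block_dim + thread_idx  # blockIdx.x * blockDim.x + threadIdx.x
    if i < n:  # guard: the last block may contain spare threads
        y[i] = a * x[i] + y[i]


def launch(n, a, x, y, block_dim=128):
    """Simulate a <<<grid, block>>> launch by looping over every block and thread."""
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y)
    return grid_dim


if __name__ == "__main__":
    n = 1000
    x = [1.0] * n
    y = [2.0] * n
    blocks = launch(n, 3.0, x, y)
    print(blocks, y[0], y[-1])  # 8 blocks of 128 threads cover 1,000 elements
```

On a real GPU the two loops disappear: the hardware schedules the threads across its CUDA cores and runs them in parallel, which is where the speed-up comes from.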

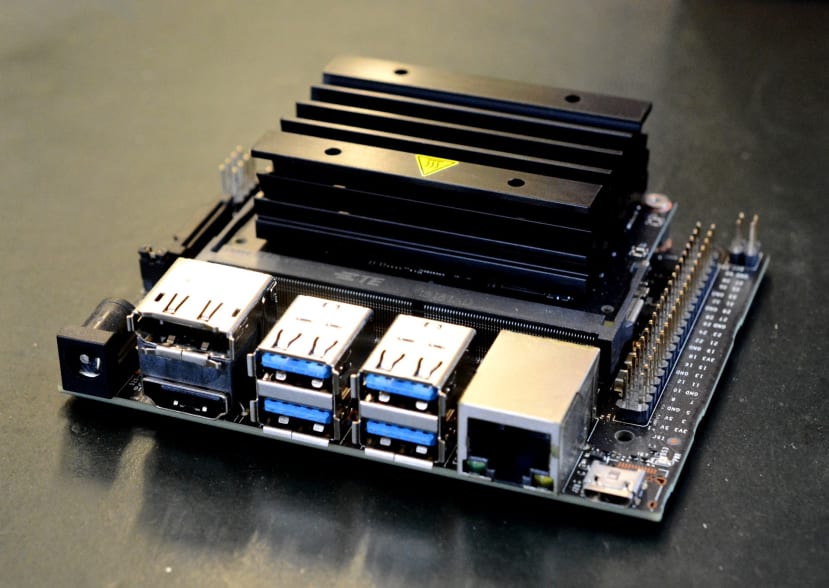

Hardware

The Jetson family has been around for some time and with the TK1 board shipping nearly 6 years ago, NVIDIA has long recognised the potential for GPGPU in embedded applications. The Nano was announced in March 2019 and brought CUDA performance at a far lower price than before and in a much smaller form factor, opening up the technology to many more markets and applications.

So what exactly does the Jetson Nano provide?

- NVIDIA Maxwell™ architecture with 128 NVIDIA CUDA® cores, 0.5 TFLOPS (FP16)

- Quad-core ARM® Cortex®-A57 MPCore processor

- 4 GB 64-bit LPDDR4

- 16 GB eMMC 5.1 Flash

- Video encode (250 MP/s) and decode (500 MP/s)

- Camera interface: 12 lanes (3x4 or 4x2) MIPI CSI-2 D-PHY 1.1 (18 Gbps)

- Display: HDMI 2.0 or DP 1.2 | eDP 1.4 | DSI (1 x2), 2 simultaneous

- 1x PCIe (x1/x2/x4), 1x USB 3.0, 3x USB 2.0

- 1x SDIO / 2x SPI / 4x I2C / 2x I2S / GPIOs

- 10/100/1000 BASE-T Ethernet

All of this fits into a module measuring just 69.6 x 45 mm, with an SO-DIMM form factor.
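Several of these headline figures can be roughly reproduced with back-of-the-envelope arithmetic. The sketch below assumes figures not stated above: a maximum GPU clock of 921.6 MHz, one fused multiply-add (two FLOPs) per CUDA core per cycle, FP16 at twice the FP32 rate, and 1.5 Gbps per MIPI CSI-2 D-PHY 1.1 lane. The video rates are compared with the pixel rate of 3840 x 2160 (4K) video at 30 and 60 fps.

```python
CUDA_CORES = 128
GPU_CLOCK_HZ = 921.6e6   # assumed maximum GPU clock
FLOPS_PER_FMA = 2        # a fused multiply-add counts as two operations
FP16_RATE = 2            # assumed: FP16 throughput is twice FP32

CSI_LANES = 12
DPHY_GBPS_PER_LANE = 1.5  # assumed D-PHY 1.1 per-lane maximum


def peak_flops(cores, clock_hz, fp16=True):
    """Theoretical peak: cores x clock x FLOPs per FMA x precision multiplier."""
    rate = FP16_RATE if fp16 else 1
    return cores * clock_hz * FLOPS_PER_FMA * rate


def video_mp_per_s(width, height, fps):
    """Pixel throughput of a video stream in megapixels per second."""
    return width * height * fps / 1e6


if __name__ == "__main__":
    print(f"FP16 peak: {peak_flops(CUDA_CORES, GPU_CLOCK_HZ) / 1e12:.2f} TFLOPS")  # 0.47
    print(f"4K30: {video_mp_per_s(3840, 2160, 30):.0f} MP/s")  # 249
    print(f"4K60: {video_mp_per_s(3840, 2160, 60):.0f} MP/s")  # 498
    print(f"CSI-2 aggregate: {CSI_LANES * DPHY_GBPS_PER_LANE:.0f} Gbps")  # 18
```

The result of about 0.47 TFLOPS is a theoretical peak, which is rounded up to the 0.5 TFLOPS quoted; real workloads will achieve less.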

As you might expect, a Developer Kit (199-9831) is available, which pairs the module with a carrier board featuring USB, HDMI and camera connectors, among others. The carrier board even includes an M.2 Key E slot, intended for a Wi-Fi/Bluetooth card, along with a Micro SD card slot; the module supplied with the Developer Kit boots from Micro SD rather than from onboard eMMC flash.

Plentiful GPIO is provided via a 40-pin header similar to that found on a Raspberry Pi, supported by NVIDIA's Jetson.GPIO library, which has the same API as the popular RPi.GPIO library. Porting applications that use RPi.GPIO for simple inputs and outputs should therefore be trivial. The Developer Kit is also compatible with the Raspberry Pi Camera (913-2664).
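Because the API mirrors RPi.GPIO, a familiar blink loop should carry over largely unchanged. The sketch below uses only calls from that shared API (`setmode`, `setup`, `output`, `cleanup`) and takes the GPIO module as a parameter, so the logic can be exercised without hardware. The `blink` helper is hypothetical, and the header pin used in the usage note is an assumption; check the pinout before wiring an LED.

```python
import time


def blink(gpio, pin, times=3, period_s=0.5):
    """Toggle an output pin using the RPi.GPIO-style API shared by Jetson.GPIO."""
    gpio.setmode(gpio.BOARD)  # number pins by their position on the 40-pin header
    gpio.setup(pin, gpio.OUT, initial=gpio.LOW)
    try:
        for _ in range(times):
            gpio.output(pin, gpio.HIGH)
            time.sleep(period_s / 2)
            gpio.output(pin, gpio.LOW)
            time.sleep(period_s / 2)
    finally:
        gpio.cleanup()  # release the pin even if the loop is interrupted
```

On the Nano this would be called after `import Jetson.GPIO as GPIO` as `blink(GPIO, 12)`, where header pin 12 is a hypothetical choice; on a Raspberry Pi the same call should work after `import RPi.GPIO as GPIO`.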

In short, even if you didn’t make use of the GPU, the Jetson Nano is still a pretty attractive proposition in terms of its base performance and the integrated I/O.

Setting up

NVIDIA provides a getting started guide, which specifies a minimum of a 5V 2A Micro USB power supply and notes that anything but a good quality supply may not actually deliver its rated current, which could lead to problems. Alternatively, the Developer Kit may be powered via a 5.5/2.1mm DC barrel connector, in which case a 5V 4A power supply is recommended.

The official Raspberry Pi power supply (187-3416) at 3A should be a good match if powering via Micro USB. If using the barrel connector, a 5V 4A RS Pro desktop power supply (175-3274) should also provide plenty of spare capacity for the attached peripherals. Note that when using the barrel connector a jumper must be fitted across J48.
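The supply ratings are easier to compare as power, since P = V x I. The small sketch below does that arithmetic and compares each supply against an assumed 10 W budget for the module (the higher of the Nano's two power modes, the other being 5 W). Anything left over is what remains for USB devices, the camera and other peripherals. The helper names are hypothetical.

```python
MODULE_BUDGET_W = 10.0  # assumed: the module running in its 10 W power mode


def supply_watts(volts, amps):
    """Rated output power of a supply: P = V x I."""
    return volts * amps


def headroom_w(volts, amps, budget_w=MODULE_BUDGET_W):
    """Power left for peripherals once the module budget has been met."""
    return supply_watts(volts, amps) - budget_w


if __name__ == "__main__":
    supplies = [
        ("Micro USB minimum (5V 2A)", 5.0, 2.0),
        ("Micro USB 3A", 5.0, 3.0),
        ("Barrel jack (5V 4A)", 5.0, 4.0),
    ]
    for label, v, a in supplies:
        print(f"{label}: {supply_watts(v, a):.0f} W, headroom {headroom_w(v, a):.0f} W")
```

On these assumptions the 2A minimum leaves essentially nothing spare when the module is working hard, which is consistent with the recommendation of a 4A supply when using the barrel connector.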

The guide provides a download link for a Micro SD card image and instructions for writing it out. Once downloaded, the file was unzipped and then written out on a Linux PC with:

$ sudo dd if=sd-blob-b01.img of=/dev/mmcblk0 bs=4M status=progress conv=fdatasync

As ever, caution must be taken when using dd: the device given as the of= argument may be different on other computers, and writing to the wrong one will overwrite its contents. The conv=fdatasync argument tells dd to flush the data to the card before exiting, which saves us from having to run sudo sync after the dd command completes.
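For readers curious what bs=4M and conv=fdatasync amount to, the following Python sketch copies a file in 4 MiB blocks and then calls fdatasync on the destination before returning, so the data has been handed to the device rather than left in the page cache. It is an illustration with a hypothetical function name, not a replacement for dd; writing to a real card device would also require root privileges.

```python
import os

BLOCK_SIZE = 4 * 1024 * 1024  # bs=4M: dd's M suffix means 1024 * 1024 bytes


def write_image(src_path, dst_path, block_size=BLOCK_SIZE):
    """Copy src to dst in fixed-size blocks, then flush file data (like conv=fdatasync)."""
    written = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(block_size)
            if not chunk:
                break
            dst.write(chunk)
            written += len(chunk)
        dst.flush()  # move Python's userspace buffer into the OS
        sync = getattr(os, "fdatasync", os.fsync)  # fdatasync is not available everywhere
        sync(dst.fileno())  # block until the OS has written the data out
    return written
```

Without the final sync, the copy could appear to finish while data is still sitting in memory, which is why removing the card straight away after a plain copy can corrupt it.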

After inserting the Micro SD card and connecting the power supply, a keyboard, monitor and mouse should be connected. Note that a DVI monitor connected via an adapter cable will not work, so a monitor with a proper HDMI or DisplayPort interface must be used instead. Furthermore, to run any of the live camera recognition demos we will obviously also need a camera connected, such as the Raspberry Pi Camera v2 (913-2664).

Upon first boot, a setup process is initiated in which the keyboard language, timezone and so on are configured and a first user account is created, amongst other tasks. It appears that if this process does not complete successfully, on the next boot you will be instructed to complete setup via a terminal session on the UART header. It is therefore important to have a compatible monitor, along with a USB keyboard and mouse, connected on first boot.

Following the initial setup, a familiar Ubuntu desktop is presented and the Jetson Nano can be used pretty much like any other small computer. An obvious next step is to open a terminal and update all the installed software to the latest versions with:

$ sudo apt update

$ sudo apt dist-upgrade

An SSH server is installed and started by default, so it’s also possible to connect over the network to carry out such tasks.

JetPack

NVIDIA JetPack SDK includes OS images, libraries, APIs, samples, developer tools, and documentation. Along with a suitably configured Linux kernel and NVIDIA drivers, the reference Ubuntu-based OS image that we wrote out earlier comes pre-populated with JetPack and provides the following libraries and examples:

- CUDA

- TensorRT SDK for high-performance deep learning inference

- cuDNN GPU accelerated library for deep neural networks

- Multimedia (MM) API for video encoding and decoding etc.

- VisionWorks OpenVX-based toolkit for computer vision and vision processing

- OpenCV popular library for computer vision, image processing, and machine learning

- Vision Processing Interface (VPI) library

This saves us the trouble of installing them ourselves and gets us up and running much faster.
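A quick way to check from Python which of these components have bindings visible to the interpreter is to probe for their modules. The sketch assumes the module names `cv2` (OpenCV) and `tensorrt` (TensorRT); some of the other components are C/C++ libraries and will not show up this way. It uses only `importlib.util.find_spec`, so nothing is actually imported.

```python
import importlib.util

# Assumed Python module names for two of the JetPack components listed above.
MODULES = {"OpenCV": "cv2", "TensorRT": "tensorrt"}


def available(modules=MODULES):
    """Return {component: True/False} without importing anything."""
    return {name: importlib.util.find_spec(mod) is not None for name, mod in modules.items()}


if __name__ == "__main__":
    for name, ok in available().items():
        print(f"{name:10} {'found' if ok else 'missing'}")
```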

Demos

As well as the examples, NVIDIA provides a self-paced online training course, plus a growing collection of projects from NVIDIA and the community. These include Two Days to a Demo, an “introductory series of deep learning tutorials for deploying AI and computer vision to the field with NVIDIA Jetson AGX Xavier, Jetson TX2, Jetson TX1, and Jetson Nano” which, as the name suggests, reportedly takes around two days to complete from start to finish. Part of this is “Hello AI World”, a set of deep learning inference demos.

To get the demos set up we need to execute the following commands:

$ sudo apt update

$ sudo apt install git cmake libpython3-dev python3-numpy

$ git clone --recursive https://github.com/dusty-nv/jetson-inference

$ cd jetson-inference

$ mkdir build

$ cd build

$ cmake ../

$ make

$ sudo make install

$ sudo ldconfig

During this process we will be prompted to select which pre-trained neural networks we would like to download.

We’ll also be prompted to install PyTorch, which is needed to run the demos that involve transfer learning, where an already trained model is used as the starting point for a model for another task. Additional models and PyTorch can both be installed later if need be; for details, see the documentation in the GitHub repo.

So now finally on to running a demo!

Hello AI World is structured roughly into the following categories:

- Classifying images with ImageNet

- Locating objects with DetectNet

- Semantic Segmentation with SegNet

- Transfer Learning with PyTorch

The first of these is image recognition, where we are looking to identify scenes and objects. The second goes a step further and locates objects within the frame. Semantic segmentation, meanwhile, involves classification at the pixel level, which is particularly useful for environmental perception, as might be used in a driverless car. Transfer learning was mentioned briefly earlier.
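The difference between classification and semantic segmentation is easiest to see in the shape of the output. A classifier produces one score per class for the whole image, whereas a segmentation network produces one score per class for every pixel, and the label map is the per-pixel argmax. The pure-Python sketch below shows only that final step, on made-up scores; it is not SegNet's implementation, and the function names are hypothetical.

```python
def argmax(scores):
    """Index of the highest score (the first one wins on ties)."""
    return max(range(len(scores)), key=scores.__getitem__)


def classify_image(class_scores):
    """Whole-image classification: a single label for the frame."""
    return argmax(class_scores)


def segment(pixel_scores):
    """Semantic segmentation: pixel_scores[row][col] is a list of per-class scores."""
    return [[argmax(scores) for scores in row] for row in pixel_scores]


if __name__ == "__main__":
    # Made-up 2x2 "image" with scores for 3 classes at each pixel.
    scores = [[[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
              [[0.2, 0.2, 0.6], [0.5, 0.4, 0.1]]]
    print(segment(scores))  # [[0, 1], [2, 0]]
```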

We’ll take a look at a demo in the first category, classifying images. This is then split into two parts: a console program, where you feed a network still images, and another where it is provided with a live video stream as input. We’ll run the latter and use a Pi Camera for video.

To execute this with the default options, we simply enter at the terminal:

$ imagenet-camera

After a short delay, we should then see a video window appear, which has text at the top of the frame with the classified object/scene and a confidence figure.

By default, the GoogLeNet convolutional neural network is used, trained on the 1,000-class ImageNet (ILSVRC) dataset. As we can see, when the scene is unclear or contains something the network has not been trained to recognise, the output jumps around between many low-confidence classifications. However, once an object it has been trained to recognise is at the forefront of the scene, it does a very good job of identifying it.
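The confidence figure shown with each label is typically the top entry of a softmax over the network's 1,000 output scores. A minimal sketch of that final step follows, using made-up scores and hypothetical labels. It does not reproduce jetson-inference's internal code; it only illustrates why a flat score distribution yields a low top-1 confidence, which is what makes the label flicker on unfamiliar scenes.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities summing to 1 (max-shifted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top1(logits, labels):
    """Return the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


if __name__ == "__main__":
    labels = ["coffee mug", "teapot", "water bottle"]  # hypothetical labels
    print(top1([6.0, 1.0, 0.5], labels))  # one score dominates: high confidence
    print(top1([1.1, 1.0, 0.9], labels))  # flat scores: low confidence
```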

Note that we could also specify alternate networks using the “--network” argument, such as AlexNet, ResNet-152, and Inception-v4, all of which are provided as pre-trained models. In addition, these may be trained — from scratch or via transfer learning — using custom datasets which are a much better fit for a given application. For example, this might be a network which has been trained to classify quality control pass and fail in a factory environment, or perhaps some potentially hazardous situation in the workplace.

What stands out here is how fast the neural network makes inferences: peaking at more than 70 frames per second (FPS), this is pretty fast. Performance will of course vary between networks, but this is nonetheless impressive.
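At 70 FPS, each frame has roughly 1000 / 70 ≈ 14.3 ms for capture, inference and display. To measure throughput in your own application, one simple approach is a sliding-window frame counter such as the hypothetical one below. It uses `time.perf_counter` by default, and the clock can be swapped out for testing.

```python
import time
from collections import deque


class FpsMeter:
    """Frames per second over the most recent `window` frames."""

    def __init__(self, window=30, clock=time.perf_counter):
        self.clock = clock
        self.stamps = deque(maxlen=window)  # oldest timestamps drop off automatically

    def tick(self):
        """Call once per processed frame; returns 0.0 until two frames have been seen."""
        self.stamps.append(self.clock())
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


def frame_budget_ms(fps):
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    meter = FpsMeter()
    fps = 0.0
    for _ in range(5):
        time.sleep(0.01)  # stand-in for capture + inference + display
        fps = meter.tick()
    print(f"~{fps:.0f} FPS; budget at 70 FPS: {frame_budget_ms(70):.1f} ms/frame")
```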

Speaking of performance, the tegrastats utility reports CPU utilisation, temperatures and other statistics. It can be run with:

$ tegrastats

In the above screenshot, we can see the CPU idle initially. Roughly halfway down, the imagenet-camera application is started, at which point CPU utilisation spikes as the network is loaded and the video stream starts, after which it settles down.
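tegrastats prints one line per sampling interval, so logging and parsing its output is a convenient way to capture utilisation over a run. The sketch below pulls RAM use and per-core CPU load out of a line. The sample line is illustrative and the field layout is an assumption based on typical Jetson output, which can vary between software releases, so adjust the regular expressions to what your board actually prints. The function name is hypothetical.

```python
import re

RAM_RE = re.compile(r"RAM (\d+)/(\d+)MB")
CPU_RE = re.compile(r"CPU \[([^\]]*)\]")


def parse_tegrastats(line):
    """Extract RAM use (MB) and per-core CPU load (%) from one tegrastats line."""
    result = {}
    ram = RAM_RE.search(line)
    if ram:
        result["ram_used_mb"] = int(ram.group(1))
        result["ram_total_mb"] = int(ram.group(2))
    cpu = CPU_RE.search(line)
    if cpu:
        cores = []
        for field in cpu.group(1).split(","):
            # Assumed format: "13%@1479" (load % at MHz), or "off" for a disabled core.
            load = field.split("%")[0]
            cores.append(int(load) if load.isdigit() else None)
        result["cpu_load_pct"] = cores
    return result


# Illustrative sample line (assumed layout, not captured from the board in the article).
SAMPLE = ("RAM 1520/3964MB (lfb 411x4MB) SWAP 0/1982MB (cached 0MB) "
          "CPU [13%@1479,6%@1479,9%@1479,off] EMC_FREQ 0% GR3D_FREQ 0% CPU@26C GPU@24C")

if __name__ == "__main__":
    print(parse_tegrastats(SAMPLE))
```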

Wrapping up

The Jetson Nano packs an awful lot into a small form factor and brings AI and more to embedded applications where it previously might not have been practical. Here we have barely scratched the surface and simply run one of the more obvious neural network inference demos, but there are plenty more examples and learning resources provided by NVIDIA and the wider community.
