Classical Automation enters Alien World of IT: Part 3

Outside industry, people often use the terms “safety” and “security” interchangeably. So, first of all, let me explain how the automation industry understands them:

Safety is the condition you establish by protecting against unintentional harm, that is, harm caused by acts that were never meant to produce the undesirable outcome. A car’s airbag is a perfect example of a safety device. It protects the driver from being flung against the windscreen when the vehicle suddenly crashes into an obstacle. Apart from the case of suicide, the driver does not plan this situation, so protecting the driver from death is a matter of safety.

On the other hand, security is the condition you get by protecting against intended harm. Such harm is caused by deliberate acts of intrusion, abuse or destruction. A car’s immobiliser is a perfect example of a security device. It protects the owner from losing the car to theft.

In short, you could say “safety protects from accidents, whereas security protects from offenders”. Let’s see what this traditionally means in a production plant:

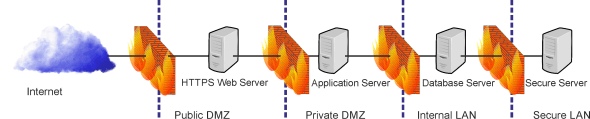

Security has been the territory of the plant protection service, and it is often a very sovereign territory. Its task is to prevent people from entering certain places, smuggling things in or out, or tampering with plant property. Today, the most critical field of plant protection is IT security, and IT departments therefore often work closely with the plant protection service. Intrusion, abuse or destruction can be carried out much more effectively, and remotely, by accessing the plant’s computer networks. Connecting the plant’s networks to the internet brings new “cybersecurity” challenges. Firewalls need to block unwanted data traffic from crossing the plant’s boundaries. Establishing security zones (such as a “demilitarised zone”, or DMZ) with firewalls between them further strengthens this principle of encapsulation. Protecting your house from burglary is similar: you need good separation from the outside world through secure doors and windows. Valuables need further protection by enclosing them in a “deeper shell” such as a safe (which is, by the way, NOT a safety device but a security device ;-).

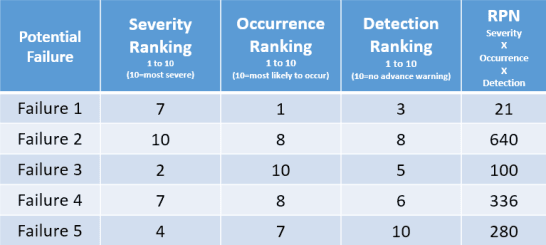

Safety in a plant has been the traditional territory of automation engineers and the Health and Safety department (often also a very sovereign territory). “Functional safety” in particular is sophisticated and needs trained engineers (e.g. CSME). The typical workflow for establishing safety starts with analysing potential failures. Methods like FMEA (failure mode and effects analysis) or FTA (fault tree analysis) help safety engineers find possible risks of failure or accidents. Scoring systems like the “RPN” (Risk Priority Number) help to evaluate the risk. You start by listing the unwanted (harmful) conditions of your system, then list all possible causes of these conditions (= failures), how often they might occur and how you could detect them. The next step is to plan system reactions or measures that address these causes; in other words, you plan provisions to prevent failure.
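To make the scoring step more concrete, here is a minimal sketch in Python. It assumes the common FMEA convention in which the RPN is the product of three ratings (severity, occurrence, detection), each on a scale from 1 to 10. The failure modes and their ratings are invented purely for illustration; a real analysis would take them from the team's own rating tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # 1 (very rare) .. 10 (almost certain)
    detection: int   # 1 (always detected) .. 10 (practically undetectable)

    def rpn(self) -> int:
        """Risk Priority Number = severity * occurrence * detection."""
        for rating in (self.severity, self.occurrence, self.detection):
            if not 1 <= rating <= 10:
                raise ValueError("ratings must be between 1 and 10")
        return self.severity * self.occurrence * self.detection


def rank_by_risk(modes):
    """Return the failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda mode: mode.rpn(), reverse=True)


# Hypothetical failure modes for a machine with a protective lid.
modes = [
    FailureMode("Indicator lamp burnt out", severity=2, occurrence=5, detection=2),
    FailureMode("Lid switch stuck closed", severity=9, occurrence=2, detection=6),
    FailureMode("Drive overheats", severity=5, occurrence=4, detection=3),
]

for mode in rank_by_risk(modes):
    print(f"{mode.rpn():4d}  {mode.description}")
```

The ranking only tells you where to look first. It does not replace the engineering judgement about which provisions actually prevent the failure.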

Typically you would add equipment to your system (e.g. to make certain functions redundant) or use selected “safety certified” equipment which has defined low failure rates. There are, for example, “safe” versions of fieldbuses such as Profibus (called PROFIsafe). Master and slave (see part 2) cyclically exchange safety-relevant data in special telegrams. Corrupt telegrams can be detected because the data is protected by a checksum (CRC, cyclic redundancy check). In addition, a watchdog inside the safety device (slave) fires and puts the device into a “safe state” as soon as no valid telegram has been received from the master within a defined short period.
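The interplay of CRC check and watchdog can be sketched in a few lines of Python. This is only an illustration of the principle and not the PROFIsafe protocol: the telegram layout, the use of `zlib.crc32` as the checksum, and the class and function names are all assumptions made for this example. Time is passed in explicitly so the behaviour is easy to follow.

```python
import struct
import zlib


def build_telegram(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 so the receiver can detect corruption."""
    return payload + struct.pack(">I", zlib.crc32(payload))


def parse_telegram(telegram: bytes):
    """Return the payload if the CRC matches, otherwise None."""
    if len(telegram) < 4:
        return None
    payload = telegram[:-4]
    (received_crc,) = struct.unpack(">I", telegram[-4:])
    return payload if zlib.crc32(payload) == received_crc else None


class SafetySlave:
    """Hypothetical slave that falls into a safe state when telegrams stop."""

    def __init__(self, timeout_s: float, now: float):
        self.timeout_s = timeout_s
        self.last_valid = now
        self.safe_state = False

    def receive(self, telegram: bytes, now: float) -> None:
        # Only a telegram with a correct CRC restarts the watchdog.
        if parse_telegram(telegram) is not None:
            self.last_valid = now

    def tick(self, now: float) -> None:
        # Called cyclically; the safe state is latched once it is reached.
        if now - self.last_valid > self.timeout_s:
            self.safe_state = True


slave = SafetySlave(timeout_s=0.1, now=0.0)
good = build_telegram(b"\x01")
corrupt = bytes([good[0] ^ 0xFF]) + good[1:]  # flip bits in the payload

slave.receive(good, now=0.05)
slave.tick(now=0.10)      # valid telegram arrived in time
slave.receive(corrupt, now=0.12)
slave.tick(now=0.20)      # corrupt telegram did not restart the watchdog
print(slave.safe_state)
```

Note that a corrupt telegram is treated exactly like a missing one: it does not restart the watchdog, so a disturbed line leads to the safe state just as a broken cable would.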

Introducing any new procedures or components into an established safe system would always require an entirely new safety analysis and certification. Therefore safe systems tend to be operated according to the principle “never touch a running system”. Secure systems, on the other hand, tend to be run according to the demand “change your secrets quicker than the intruder hacks them”. These seem to be conflicting strategies. But in practice, both territories have found ways to deal with reality:

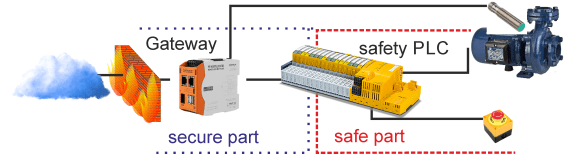

In automation, engineers try to decouple (encapsulate) safe system components from “unsafe” parts (i.e. parts not approved for safe operation). So you can add and change parts of a system as long as you do not introduce new safety risks and as long as they do not keep the safe parts from preventing harm. For example, if you protect workers from touching rotating parts by stopping the rotation as soon as the lid of a protective enclosure is opened, you could change anything outside or inside the enclosure that has nothing to do with the safety function. Changing the enclosure, the sensors which detect its opening, the electronics which stop the rotation or even the drive which causes the rotation could change factors of the risk and failure analysis. Therefore these parts belong to the safety concept. But adding a sensor which communicates the state to the cloud would of course not affect the safety function that prevents workers from touching rotating parts.

In security technology, computer engineers needed to find a secure way to cross system borders and establish secure communication between distributed systems, even over the internet. Encryption has long been the solution to this problem. Instead of sending postcards out of your house, you send sealed envelopes with encoded information. Decoding it requires possession of a pre-negotiated key. Of course, circumventing this encryption is always an aim of intruders, so security constantly needs to improve its encryption methods. Even so, encryption is much more stable than the rest of an internet-based system. Internet protocols, standard tools and languages like HTML, Java, etc. tend to suffer from vulnerabilities. They often provide unplanned back doors, and finding these back doors has become hackers’ daily sport. So regularly updating these components to new versions in which known risks have been removed is essential. Any system connected to the internet without a way to receive security updates would be an ideal victim for offenders.
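The idea of a key that both sides already hold can be shown with Python's standard library. Since the standard library does not ship a cipher, the sketch below covers only the “seal” on the envelope, not the encoding of its content: it uses HMAC-SHA256 so that the receiver can detect whether a message was altered or sent by someone without the key. The function names and the example key are assumptions for illustration; a real system would add encryption and proper key management on top.

```python
import hashlib
import hmac

TAG_LEN = hashlib.sha256().digest_size  # 32 bytes for SHA-256


def seal(key: bytes, message: bytes) -> bytes:
    """Append an HMAC-SHA256 tag computed with the pre-shared key."""
    tag = hmac.new(key, message, hashlib.sha256).digest()
    return message + tag


def open_sealed(key: bytes, sealed: bytes):
    """Return the message if the tag is valid, otherwise None."""
    if len(sealed) < TAG_LEN:
        return None
    message, tag = sealed[:-TAG_LEN], sealed[-TAG_LEN:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return message if hmac.compare_digest(tag, expected) else None


# Hypothetical key; in practice it is negotiated or provisioned securely.
shared_key = b"k" * 32
envelope = seal(shared_key, b"temp=21")

print(open_sealed(shared_key, envelope))                  # genuine message
print(open_sealed(b"x" * 32, envelope))                   # wrong key
print(open_sealed(shared_key, b"temp=99" + envelope[7:])) # altered content
```

Without the key an intruder can still read this message, but cannot change it or forge a new one unnoticed.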

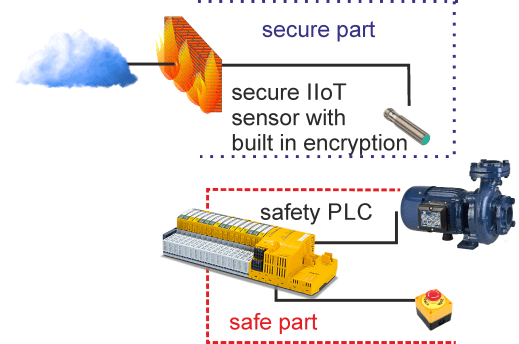

So how do we deal with these facts when it comes to IIoT? Separation, encryption and OTA (over-the-air) updates are efficient means of combining safety and security in the automation industry.

Separation can be achieved by connecting the control systems, with their internal buses for M2M communication, to a gateway module. This module strictly separates data traffic on the automation side from data traffic over the internet. It is never part of a safety concept and therefore can and must have updatable firmware. Such updates might be needed only rarely (e.g. when encryption methods are updated) or more frequently, depending on how much the gateway interacts with other systems. For example, if the gateway has an integrated web server, there could be many as yet unknown ways to attack the system, which would need to be eliminated as soon as they become known.
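One possible way to picture this separation is a gateway that only ever copies an allow-listed set of values outwards and never forwards anything from the internet to the automation side. The following Python sketch is a simplified assumption of how such a rule could look, not a description of any particular product; the tag names, the request format and the function names are invented for the example.

```python
import json

# Hypothetical tag names; a real gateway would read these from its configuration.
EXPORTED_TAGS = frozenset({"motor_speed_rpm", "lid_open"})


def export_snapshot(fieldbus_values: dict) -> str:
    """Build the outbound message from allow-listed tags only."""
    exported = {
        tag: value
        for tag, value in fieldbus_values.items()
        if tag in EXPORTED_TAGS
    }
    return json.dumps(exported, sort_keys=True)


def handle_internet_request(request: dict, fieldbus_values: dict) -> dict:
    """Answer a request from the internet side without writing to the fieldbus."""
    if request.get("action") != "read":
        return {"ok": False, "reason": "only read requests are accepted"}
    tag = request.get("tag")
    if tag not in EXPORTED_TAGS or tag not in fieldbus_values:
        return {"ok": False, "reason": "tag is not exported"}
    return {"ok": True, "value": fieldbus_values[tag]}


# Values as the gateway might see them on the automation side.
snapshot = {"motor_speed_rpm": 1450, "lid_open": False, "safety_relay_state": 1}

print(export_snapshot(snapshot))
print(handle_internet_request({"action": "write", "tag": "motor_speed_rpm"}, snapshot))
print(handle_internet_request({"action": "read", "tag": "lid_open"}, snapshot))
```

The safety-related value never leaves the automation side, and no request from outside can change anything inside. Everything the gateway does beyond that (web server, cloud client, encryption) can then be updated without touching the safety concept.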

But instead of using a dedicated gateway (which is in some ways similar to a firewall), you could also separate things by using additional specialised sensors which communicate directly over the internet. In both scenarios you need encryption and means of authentication to establish cybersecurity. Cloud services like AWS or Azure (to name just the two most widely used) offer an extensive toolbox for authentication and encryption on the server side. But when it comes to the sensors or gateways, we leave the IT world and enter the embedded world. Embedded systems have less memory and CPU power and often struggle with the computing effort that encryption requires. The chip industry has recognised this need and often provides encryption co-processors on its SoCs (System on a Chip), or you can use special “crypto chips” in your system design. The biggest obstacle to applying encryption and authentication methods in embedded systems is the lack of trained hardware and software engineers who are familiar with both embedded programming techniques and IT security methods. On the other hand, I have often talked to trained IT programmers who could not understand how difficult it is to extract data from an industrial control system or even a safety system. They tend to frown on “never touch a running system” instead of respecting that this is a long-proven method of protecting an asset which often runs for decades, not just two years like a smartphone or server hardware.

IIoT urgently needs a new culture of cooperation and co-education between IT and automation engineers. Both aspects are essential for I4.0, and therefore engineers should know and respect the history they come from and learn from each other. Because the concepts are so different, it is hard for an automation engineer to enter the world of cybersecurity, but it is possible. Companies should encourage their embedded programmers to improve their security skills. IT security experts should try to adapt their language to a beginner’s level when talking to embedded engineers. Do not simply presume they know what “private key” or “certificate” means. Be kind and explain instead of complaining.

In our last part, coming soon, I will talk about open source, and why we should not be afraid to share intellectual property when automation meets IIoT.
