Revisiting the INMOS Transputer

A look back at the pioneering British microprocessor, its impact and legacy.

The INMOS Transputer was positioned to revolutionise computing and, for a period in the 1980s, it delivered on this promise, finding use in many diverse advanced applications, ranging from digital signal processing for telecommunications networks and synthetic aperture radar (SAR), through to high-performance desktop computing and massively parallel supercomputers.

Sadly, by the early 1990s things were not looking good for the transputer; INMOS, the once state-owned semiconductor company, had been sold on to Thorn EMI and then subsequently to SGS Thomson, with long delays in the introduction of the latest generation microprocessor resulting in the transputer losing its edge and being overtaken by arguably less elegant architectures. It wasn’t long then before the transputer was all but abandoned, albeit not without having made its mark.

So what made the transputer special?

Key Features

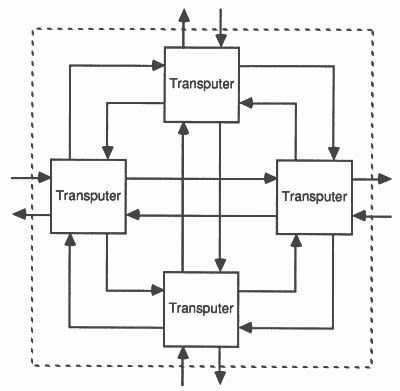

Transputer is a portmanteau of transistor and computer, with the name chosen to reflect the fact that transputers are building blocks which may be connected together to create something more powerful, much as transistors are connected to build a computer. This did not come without practical limits, but systems that were impressively large and powerful for the time could be constructed this way.

A four-handled promotional mug marketing the T9000 transputer.

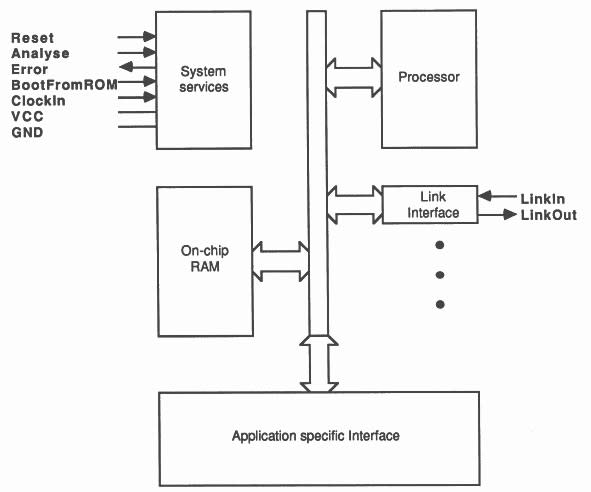

Four serial links operating at 5, 10 or 20Mbit/s are a defining characteristic of the transputer. This may not sound fast today, but it’s important to remember that this was at a time when microprocessor clock speeds were counted in the low tens of megahertz. These “os-links” enabled scaling by building networks of transputers, in which chips were able to relay messages on to their neighbours. In addition, the links could be used to boot a transputer, instead of booting from memory, which meant fewer ROMs were required and systems were much more flexible.
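To make the relaying idea concrete, here is a minimal Python sketch. It is not INMOS code: it assumes a hypothetical rectangular grid in which each transputer uses its four links to reach its north, south, east and west neighbours, and it simply finds a shortest chain of chips that a message could be relayed along. The function names and the grid layout are assumptions made for illustration.

```python
from collections import deque

def neighbours(node, width, height):
    """Yield the grid neighbours reachable over a node's four links."""
    x, y = node
    for dx, dy in ((0, -1), (0, 1), (1, 0), (-1, 0)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            yield (nx, ny)

def relay_path(src, dst, width, height):
    """Breadth-first search for a shortest chain of nodes from src to dst."""
    previous = {src: None}
    frontier = deque([src])
    while frontier:
        node = frontier.popleft()
        if node == dst:
            # Walk back through the recorded predecessors to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in neighbours(node, width, height):
            if nxt not in previous:
                previous[nxt] = node
                frontier.append(nxt)
    return None  # destination is not part of the grid

path = relay_path((0, 0), (3, 2), width=4, height=4)
print(len(path) - 1, "link hops via", path)
```

In a 4 by 4 grid the message from corner (0, 0) to (3, 2) crosses five links, which hints at why network topology mattered so much in larger systems.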

Other key features include a microcontroller-like design, with a small amount of onboard RAM and an integrated memory controller. Although not RISC, the CPU architecture is relatively simple, with additional circuitry dedicated to scheduling traffic over os-links and providing support for priority levels which improved real-time and multiprocessor performance. The same logical system is also used to implement virtual network links in memory, which enables program scheduling within the transputer, without the need for an operating system.
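The effect of priority-aware scheduling can be illustrated with a toy model. The Python sketch below is an assumption-laden simplification, not a description of the transputer's hardware scheduler: it assumes two priority levels, models each process as a generator that yields whenever it would be descheduled, and always serves the high-priority queue first. The names `run` and `worker` are hypothetical.

```python
from collections import deque

def run(high, low):
    """Run generator-based processes; the high-priority queue is always served first."""
    queues = {"high": deque(high), "low": deque(low)}
    trace = []
    while queues["high"] or queues["low"]:
        level = "high" if queues["high"] else "low"
        proc = queues[level].popleft()
        try:
            trace.append(next(proc))      # run the process up to its next yield
            queues[level].append(proc)    # rejoin the back of its own queue
        except StopIteration:
            pass                          # process has finished
    return trace

def worker(name, steps):
    """A process that performs `steps` units of work, yielding after each one."""
    for i in range(steps):
        yield f"{name}{i}"

print(run([worker("H", 2)], [worker("A", 2), worker("B", 1)]))
# ['H0', 'H1', 'A0', 'B0', 'A1']
```

The high-priority process finishes before either low-priority process starts, while the low-priority processes take turns, all without any operating system in the picture.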

Family

The transputer family went to market via three distinct groups:

- T2. 16-bit.

- T4. 32-bit.

- T8. 32-bit with 64-bit IEEE 754 floating-point.

The 16-bit T212 transputer was launched with parts featuring 2KB RAM and a clock speed of either 17.5MHz or 20MHz, with the T222 expanding the on-chip RAM to 4KB, and the T225 in addition featuring debugging breakpoint support.

The 32-bit T414 had 2KB RAM and a 15MHz or 20MHz clock speed. The T425 increased RAM to 4KB and added breakpoint support, along with extra instructions.

The T800 featured a further extended instruction set and most importantly added a 64-bit FPU.

However, this shouldn’t have been where the story ended.

A T9000 HTRAM fitted in a prototype system from Parsys.

The T9000 was built on the T8 series and featured many improvements, such as a 16KB cache, a 5-stage pipeline, an upgraded 100Mbit/s link system, a new packet-based link protocol, and Virtual Channel Processor (VCP) hardware, which promised far greater flexibility, as programs did not need to be aware of physical network topology. However, the T9000 suffered significant delays, sufficient funding was not available, and it did not meet its performance goals. This spelled the end of the T9000 and only a small number of prototype systems were ever constructed. It also effectively marked the end of the road for the transputer, but by no means the end of its influence.

IMS C004 programmable link switch on an IMS B008 ISA bus development board.

INMOS additionally provided support chips, and most systems had at least a C011 or C012 link adapter, which enabled interfacing to an 8-bit data bus. Later development boards and more advanced systems might feature one or more C004 transparent programmable link switches, which provided a full crossbar switch between 32 transputer link inputs and outputs. This allowed dynamic network reconfiguration, albeit sometimes with a notable performance overhead.
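The crossbar idea can be modelled in a few lines. This is an illustrative Python sketch only: the class and method names are hypothetical, and it says nothing about how a real C004 is actually configured. It simply records which input feeds each output and shows that routes can be changed at run time.

```python
class Crossbar:
    """Toy model of a crossbar switch: each output is fed by at most one input."""

    def __init__(self, size=32):
        self.size = size
        self.source_of = {}  # output number -> input number

    def connect(self, inp, out):
        """Route input `inp` to output `out`, replacing any previous route to `out`."""
        for port in (inp, out):
            if not 0 <= port < self.size:
                raise ValueError(f"port {port} out of range")
        self.source_of[out] = inp

    def disconnect(self, out):
        """Remove whatever route currently feeds output `out`, if any."""
        self.source_of.pop(out, None)

    def forward(self, inp, data):
        """Return {output: data} for every output currently fed by `inp`."""
        return {out: data for out, src in self.source_of.items() if src == inp}

switch = Crossbar()
switch.connect(0, 5)
switch.connect(5, 0)                 # a link has two directions, so wire the return path too
print(switch.forward(0, b"hello"))   # {5: b'hello'}

switch.disconnect(5)                 # reconfigure: input 0 now feeds output 7 instead
switch.connect(0, 7)
print(switch.forward(0, b"hello"))   # {7: b'hello'}
```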

Applications

The transputer found no shortage of applications and here we’ll take a look at just a few of these.

HPC & Scientific

It may come as no surprise to learn that the transputer proved popular in high-performance computing (HPC) and scientific applications, thanks to the architecture making it relatively easy not only to construct massively parallel systems, but also to scale them as demand grew. The transputer was also a hotbed for research and development in parallel computing areas such as network topologies, software and algorithms.

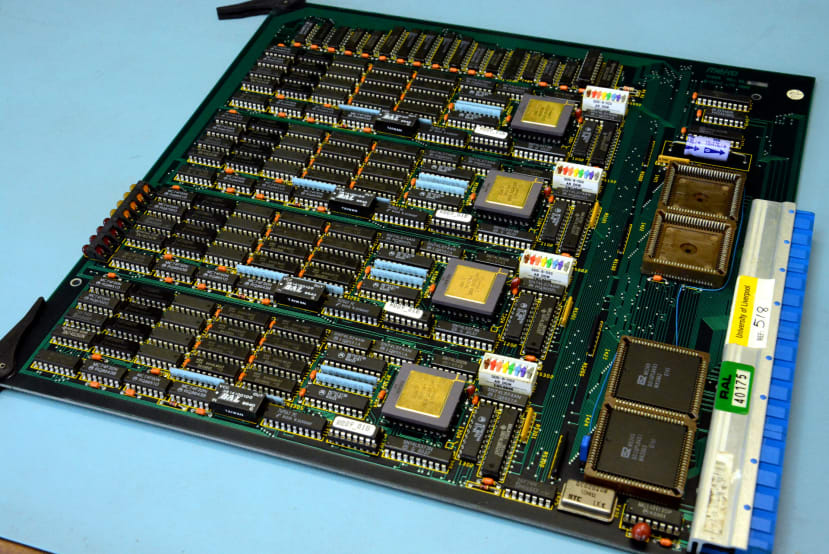

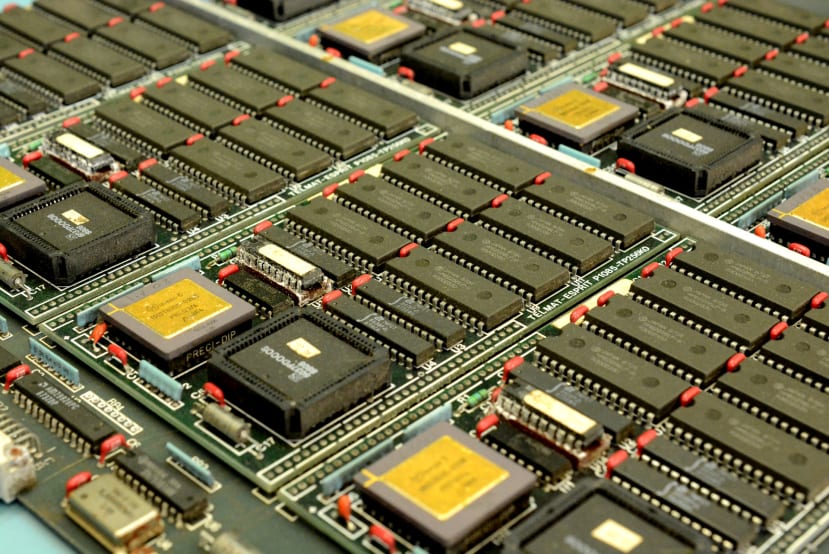

Above can be seen a board from a massively parallel Meiko Computing Surface, which features four T800 transputers plus external memory. Multiple such boards — along with others providing different types of I/O — were fitted into a cabinet which was sufficiently small to be used deskside. Alternatively, many cabinets could be installed in a data centre and interconnected, to create a much more powerful system which may be logically partitioned and used as a shared resource.

CPU board from an ESPRIT Supernode.

The transputer enabled a great deal of original R&D and a notable mention must go to the Supernode project, which ran under Europe’s ESPRIT programme in the mid-to-late 1980s. Its objective “was to develop a high performance, multiprocessor, prototype computer with a flexible architecture, suitable for a wide range of scientific and engineering problems.” The project was deemed a success, having developed a machine which cost tens of thousands of pounds instead of the millions typical for a supercomputer. This led to a follow-on Supernode II project, which aimed to provide the software infrastructure necessary to exploit such platforms.

Supernode partners in the UK included Thorn-EMI, the Royal Signals and Radar Establishment (RSRE), and the University of Liverpool. The project resulted in Parsys Ltd being launched as a UK spin-off in 1988, with Thorn-EMI as a major stakeholder. Parsys went on to develop a range of transputer-based systems and reached £1 million in sales by 1989. Commercial opportunities were meanwhile being developed by French partner Telmat, who produced the hardware for the original Supernode project. Both companies developed associated software offerings, adopting somewhat different strategies and seemingly enjoying a good working relationship.

Commercial

A Parsytec Xplorer.

The transputer also enjoyed reasonable success in commercial applications ranging from industrial, through to medical and consumer electronics.

Parsytec produced a range of transputer-based systems, such as the eminently compact Xplorer, which was used together with a Sun workstation as a front-end/development system. This offered a solution for more sophisticated commercial users, with an upgrade path to the GigaCluster supercomputer which could be scaled up to 16,384 transputers.

Xplorer connections, including four transputer links, for expansion and workstation connection.

In addition, Parsytec offered industrial systems for real-time control and signal processing, where transputer features such as the MCU-like nature, hardware scheduling, and distributed parallel architecture with integrated communications and inherent scalability, offered significant benefits.

A transputer-based PCMCIA ISDN adapter.

Consumer electronics company, AVM, produced a range of transputer-based ISDN controllers in ISA, PCMCIA and PCI bus versions. These proved particularly popular and can still be found from time to time on a popular auction site today, with a number of websites detailing how to modify these for use as a general-purpose transputer development system.

Other notable applications include use in test equipment, medical imaging and radar.

Software

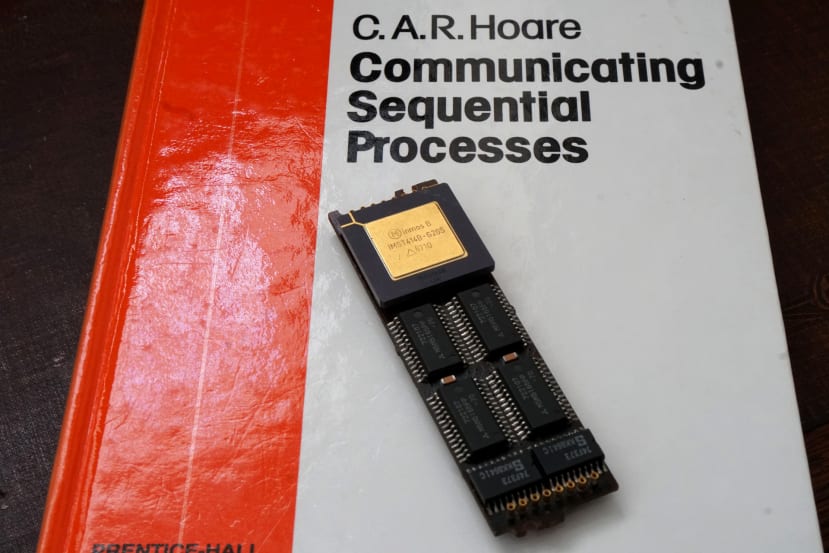

The Occam concurrent programming language was developed for the transputer by David May and others at INMOS. This in turn builds on the communicating sequential processes (CSP) formal language from Tony Hoare, who was also an advisor to INMOS. In recognition of the success of the collaboration between INMOS and Oxford Computing Laboratory, they were jointly presented with the Queen’s Award for Technological Achievement in 1990.

The Occam programming language enables communication between processes via named channels, in which one process outputs to a channel while the other inputs.

Whereas sequential execution is implicit in most other programming languages, in Occam processes to be executed sequentially are placed within a SEQ construct, while those to be executed in parallel are placed within a PAR construct.

Occam aims to simplify the writing of concurrent programs by reducing the burden of synchronisation between the different parts of a program; a message will only be sent when both sender and receiver are ready.

    PAR
      SEQ
        ... knit body
        ... output body
      SEQ
        ... knit right sleeve
        ... output right sleeve
        ... knit left sleeve
        ... output left sleeve
      SEQ
        ... knit neck
        ... output neck
      SEQ
        PAR
          ... input body
          SEQ
            ... input right sleeve
            ... input left sleeve
          ... input neck
        ... sew sweater

The above example, from A Tutorial Introduction to Occam programming, shows how a sweater might be concurrently knitted, with “…” lines simply describing the parts doing the actual work. It illustrates how programs may be structured using SEQ and PAR to ensure that tasks which can be executed in parallel are, while those which must take place in sequence do so.
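For readers without an Occam toolchain to hand, the same structure can be approximated in Python. The sketch below is an illustration rather than a translation: it uses threads in place of Occam processes, and a small hypothetical `Channel` class that blocks the sender until the receiver has taken the value, assuming exactly one sender and one receiver per channel. The helper names `par` and `make_sweater` are likewise assumptions.

```python
import queue
import threading

class Channel:
    """Point-to-point channel: send() returns only once recv() has taken the value.
    Assumes exactly one sender and one receiver per channel."""

    def __init__(self):
        self._slot = queue.Queue(maxsize=1)
        self._taken = threading.Semaphore(0)

    def send(self, value):
        self._slot.put(value)
        self._taken.acquire()    # wait until the receiver has the value

    def recv(self):
        value = self._slot.get()
        self._taken.release()    # let the sender carry on
        return value

def par(*processes):
    """Run the callables concurrently and wait for all to finish (like PAR)."""
    threads = [threading.Thread(target=p) for p in processes]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

def make_sweater():
    body, sleeves, neck = Channel(), Channel(), Channel()
    parts = {}

    def knit_body():             # SEQ: knit, then output
        body.send("body")

    def knit_sleeves():          # SEQ: right sleeve, then left sleeve
        sleeves.send("right sleeve")
        sleeves.send("left sleeve")

    def knit_neck():
        neck.send("neck")

    def assemble():              # SEQ: gather the parts in parallel, then sew
        def get_body():
            parts["body"] = body.recv()

        def get_sleeves():
            parts["sleeves"] = [sleeves.recv(), sleeves.recv()]

        def get_neck():
            parts["neck"] = neck.recv()

        par(get_body, get_sleeves, get_neck)
        parts["sewn"] = True

    par(knit_body, knit_sleeves, knit_neck, assemble)
    return parts

print(make_sweater())
```

Each body within a function runs in sequence, mirroring SEQ, while `par` mirrors PAR. Because every send waits for its matching receive, the two sleeves always arrive in the order they were knitted.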

INMOS and partners provided an extensive collection of software tools and libraries, such as for graphics programming, along with C and FORTRAN compilers, in addition to Occam. Development was frequently done using a PC host, with an iserver program used to boot transputers and provide I/O services. Other host platforms were also supported, with INMOS and third parties offering solutions for Sun, DEC VAX and VMEbus platforms, for example.

INMOS also provided comprehensive documentation in the form of databooks, instruction set manuals and application notes. There was no shortage of other textbooks either, on topics ranging from Occam basics to use in advanced applications such as robotics.

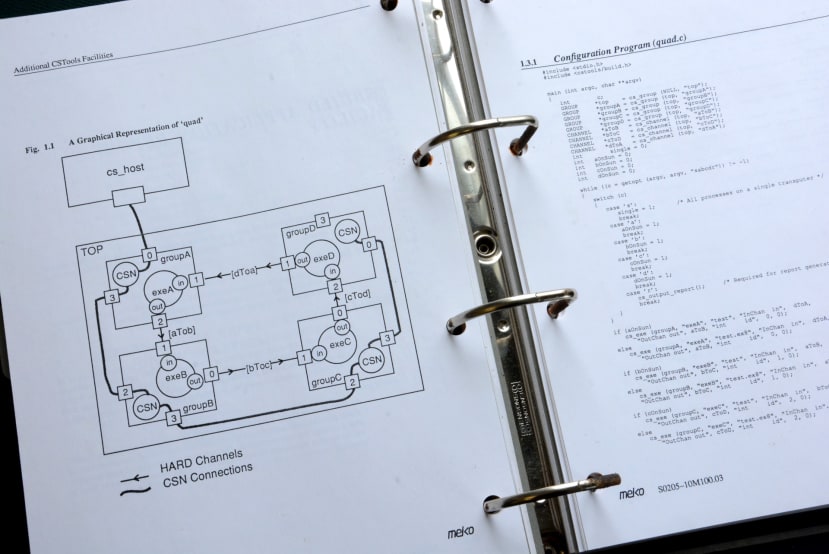

System vendors typically had their own offerings too, such as supercomputer company Meiko with its Computing Surface Tools (CS Tools) platform. Amongst other things, this simplified transferring messages between transputers (and later on between other types of processor), and provided a run-time executive, graphics library, compilers, and tools for managing Computing Surface resources in a multi-user environment.

Legacy

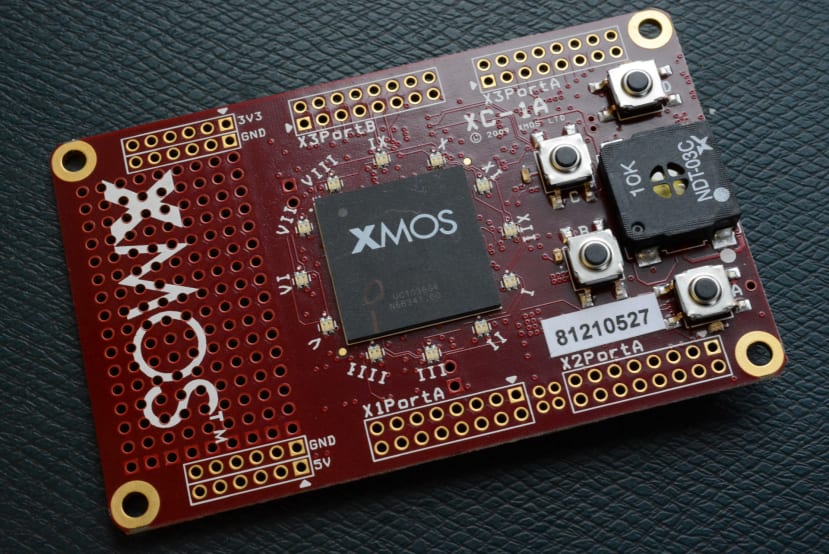

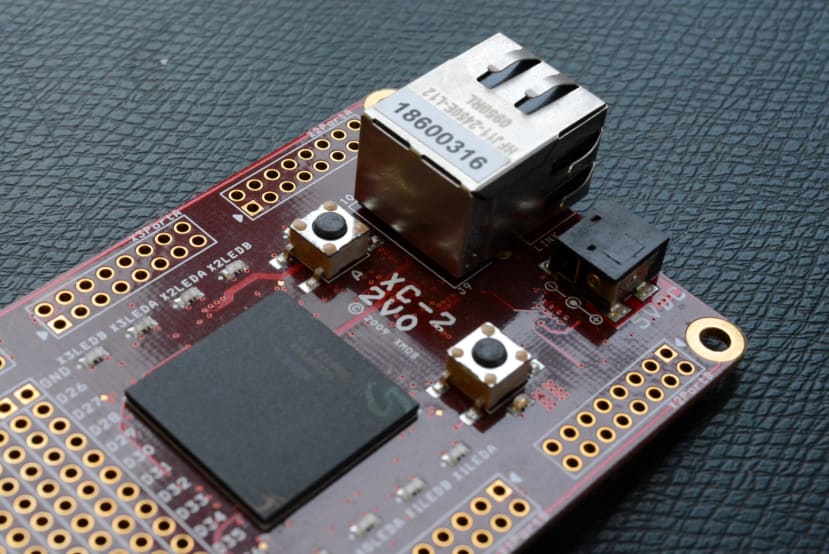

An early XMOS development board.

Perhaps the most visible legacy today comes in the form of devices from semiconductor company XMOS, which was named in loose reference to INMOS and co-founded by Professor David May, lead architect of the transputer and designer of the Occam programming language. Their xCORE devices are available with 1 to 4 physical processor cores or “tiles”, which are partitioned into up to 32 logical processor cores. Logical cores share processing resources and memory, but each has its own register file and gets a guaranteed slice of processing power.

XMOS development board with Ethernet.

The xCORE architecture makes it possible to implement interfaces such as USB, I2S and Ethernet purely in software, thanks to its real-time, deterministic nature. XMOS provides a large collection of software libraries for implementing such standards, along with other example code. Typical applications include professional and consumer audio, where their devices have been integrated into products from numerous well-known brands.

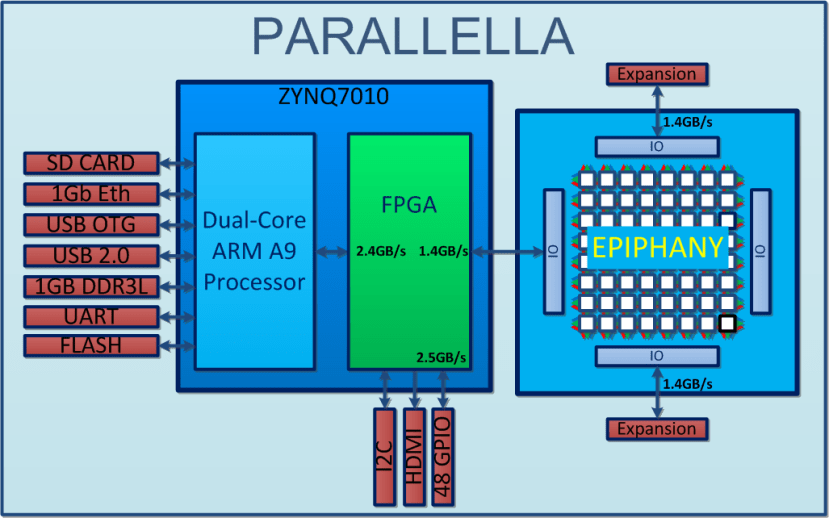

Parallella block diagram.

The move from sequential to parallel programming requires a change in mindset and remains one of the biggest hurdles to the widespread adoption of parallel computing. Meanwhile, parallel computing becomes ever more important across many application domains. With this in mind the Parallella project set about creating a low-cost board that integrated a Zynq device (Arm CPU + FPGA) running Linux, together with a 16-core Epiphany chip. Along with processor cores, the Epiphany integrates a Network-on-Chip plus eLinks for chip-to-chip links. The latter are used to interface with the host processor via FPGA, with an additional two eLinks brought out to expansion connectors on the Parallella board, enabling networks spanning multiple boards to be constructed.

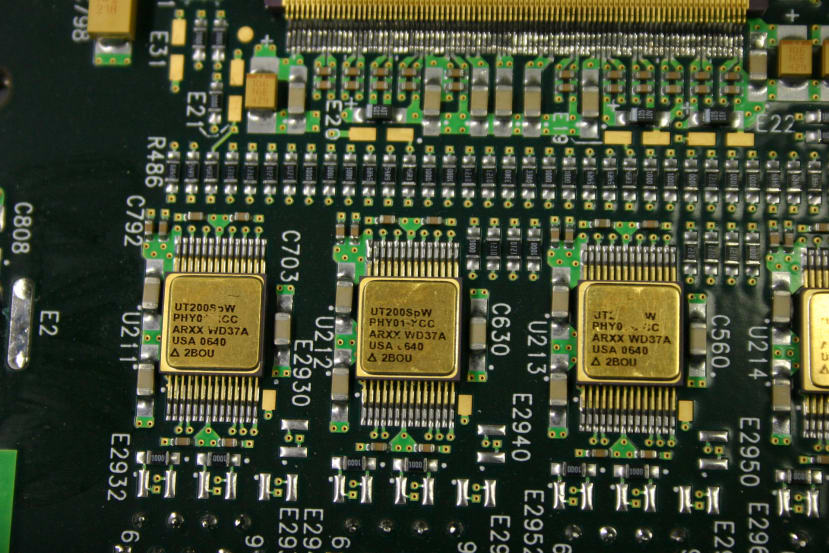

SpaceWire PHY chips in the James Webb Telescope, NASA.

SpaceWire is a spacecraft communication network that is based in part on IEEE 1355, which in turn was derived from the asynchronous serial networks developed for the T9000 transputer. SpaceWire network nodes are connected by low-latency, point-to-point serial links, with packet-switching and wormhole routing. The communication network is used by ESA, NASA and many others, with applications including the James Webb Space Telescope (JWST).
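One way to picture packets finding their way through such a network is path addressing, one of the addressing modes in the SpaceWire standard, in which each router reads the leading byte of a packet as an output port number and strips it before passing the rest on. The Python sketch below is a heavily simplified illustration under that assumption: the topology, node names and the `route` function are hypothetical, and flow control, end-of-packet markers and logical addressing are all ignored.

```python
def route(packet, routers, start):
    """Follow a path-addressed packet through `routers`.

    `routers` maps a router name to {output_port: next_hop_name}. Each router
    treats the leading byte as an output port and deletes it before forwarding.
    Returns (destination name, remaining bytes).
    """
    here = start
    data = bytes(packet)
    while here in routers:
        if not data:
            raise ValueError(f"packet ran out of address bytes at {here}")
        port, data = data[0], data[1:]   # read the header byte, then drop it
        try:
            here = routers[here][port]
        except KeyError:
            raise ValueError(f"{here} has no link on port {port}") from None
    return here, data

# Hypothetical topology: two routers, a camera and a recorder.
topology = {
    "router_a": {1: "router_b", 2: "recorder"},
    "router_b": {3: "camera"},
}

dest, cargo = route(bytes([1, 3]) + b"expose", topology, "router_a")
print(dest, cargo)  # camera b'expose'
```

Because a router only needs the first byte to choose an output, it can begin forwarding before the rest of the packet has arrived, which is the essence of wormhole routing.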

Wrapping up

The Picoputer from Andrew Menadue.

The transputer may have come to the end of the road in the 1990s, but the ideas that it embodied live on, not just in the aforementioned technologies, but in others also. While it is disappointing, or some might even say a tragedy, that the processor architecture is not alive and well today, the contribution made to the field of computing is significant and the transputer continues to inspire.

Finally, there is also a small but dedicated group of enthusiasts still building and experimenting with transputer technology, via original hardware and new designs using old transputer chips, and also via emulation. A fun example of the latter is the “Picoputer”, which uses a Raspberry Pi Pico (212-2162) to emulate a transputer in software, with the RP2040 programmable IO (PIO) used to implement transputer os-links which are actually capable of booting the emulated processor.

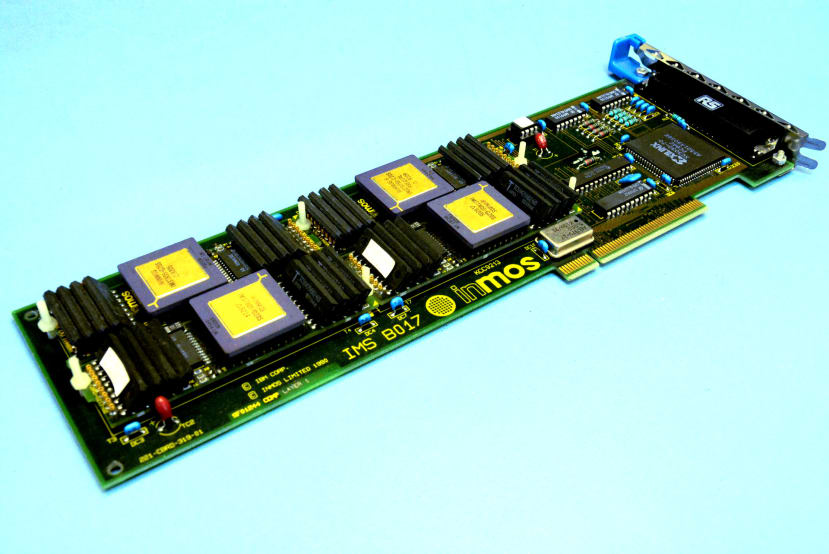

Top image: an MCA bus development board for use with IBM PS/2 computers, fitted with four 20MHz T805 TRAMs.