Novel Mechanical Braille Display with OCR implementation

This project aims to counter falling braille literacy in the United Kingdom through the implementation of a novel, inexpensive, mechanical braille display. Optical character recognition is used alongside the display to improve its real-world integration.

Parts list

| Qty | Product | Part number |
|---|---|---|
| 16 | Micro-vibration motor rated at 14000rpm | MC02743 |
| 4 | Microchip PIC16F753-I/P, 8bit PIC Microcontroller, PIC16F, 20MHz, 2k words Flash, 14-Pin PDIP | 803-2336 |
| 2 | Solentec Limited Linear Solenoid, 6 V dc, 30 x 14 x 16 mm | 905-9953 |
| 16 | NPN Phototransistor 850nm 40deg. 5mm | 902-6654 |
| 16 | SFH 4550 Osram Opto, 860nm IR LED, 5mm (T-1 3/4) Through Hole package | 912-8589 |

Mechanical braille displays are fundamental to countering the falling braille literacy rates in the United Kingdom. Commercial displays typically cost over £1000 because they use piezoelectric actuators, which puts them out of reach of students and people from disadvantaged communities. To reduce this cost, alternative methods of actuation must be employed.

Mechanical Design

The fundamental issue in improving braille displays comes from the innate dimensions of the characters (left) as well as the required refresh rate. Each character consists of six pins that are either flush with or raised above the reading surface in a unique combination. For a display of eight characters, 48 individual actuations must take place within 0.1 seconds over an area of 300 mm². Traditional actuators are inexpensive but large relative to a character. To counter this, the required number of actuations is reduced through an asymmetric camshaft.

The image below shows the proposed method. Each shaft encodes all eight possible states of three pins in its orientation, with a unique combination of bosses at every 45° step. A single character therefore needs two actuators rather than six. The shafts are driven by MC02743 micro-vibration motors at a 30% duty ratio, achieving a 90 Hz rotation speed.
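As a rough illustration of this encoding, the sketch below maps a six-dot character to two shaft angles. The assignment of dots 1 to 3 and 4 to 6 to the two shafts, the binary ordering of the eight states, and the function name `shaft_angles` are all assumptions made for illustration; the real boss layout on the camshaft may differ.

```python
STEP_DEG = 45  # one unique boss combination every 45 degrees (8 states per shaft)

def shaft_angles(dots):
    """Return (angle_a, angle_b) in degrees for a set of raised dots (1-6).

    Assumption: shaft A carries dots 1-3, shaft B carries dots 4-6, and the
    three pin states are read as a binary index into the eight 45-degree steps.
    """
    index_a = sum(1 << (d - 1) for d in dots if 1 <= d <= 3)
    index_b = sum(1 << (d - 4) for d in dots if 4 <= d <= 6)
    return index_a * STEP_DEG, index_b * STEP_DEG

# Actuation count for an eight-character display.
characters = 8
pin_actuations = characters * 6    # one actuator per pin
shaft_actuations = characters * 2  # one actuator per camshaft

if __name__ == "__main__":
    print(pin_actuations, "pin actuations become", shaft_actuations, "shaft rotations")
    print(shaft_angles({1, 2, 5}))
```

Under these assumptions an eight-character display needs 16 shaft rotations instead of 48 individual pin actuations.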

The complete system can be seen above. Two rows of opposing camshafts are orientated into the correct position before being raised into the braille pins by the pull action solenoids. The braille pins are spring returned, using the casing as a stop.

The orientation has a tolerance of ±5° before the height of the pins falls outside the Centre of Braille Innovation (CBI) requirements. This precision is difficult to achieve without bulkier control mechanisms such as a Gray-code encoder. Instead, a securing pin, driven by a torsional spring, applies a constant upward force into the concave section of the camshafts. This increases the tolerance to ±22.5°, half the 45° spacing between states.
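The effect of the securing pin can be pictured with a simple model in which the shaft always settles at the nearest 45° position. This is an idealised assumption for illustration only; the function names are hypothetical and the real mechanism will have friction and dynamics that this sketch ignores.

```python
STEP_DEG = 45.0  # spacing between valid camshaft states

def settled_angle(angle_deg):
    """Angle the shaft settles at, assuming the pin pulls it to the nearest 45-degree state."""
    return (round(angle_deg / STEP_DEG) * STEP_DEG) % 360.0

def within_tolerance(target_deg, actual_deg, tol_deg):
    """True if the signed angular error, wrapped to [-180, 180), is within tol_deg."""
    error = (actual_deg - target_deg + 180.0) % 360.0 - 180.0
    return abs(error) <= tol_deg

if __name__ == "__main__":
    stop = 150.0  # motor stops 15 degrees past the 135-degree target
    print(within_tolerance(135.0, stop, 5.0))                 # without the securing pin
    print(within_tolerance(135.0, settled_angle(stop), 5.0))  # with the securing pin
```

In this model any stopping error smaller than 22.5° is corrected to the intended state, while an error exactly on the boundary is ambiguous.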

The securing pin is also employed for control. At the apex of its descent, it occludes the light from an infrared LED, causing a high-to-low transition in the control circuit.

Hardware Design

The hardware is structured to allow scaling of the device. A master controller performs the optical character recognition, the translation and the raising of the system. Because it has a fixed number of peripherals, each slave microcontroller must relay position commands to the slaves further down the line. Each slave drives and controls four DC motors (two characters), forming a motor block.

Two feedback bits are used: the 'stop bit' specifies whether a block has received its positional commands, and the 'end bit' indicates whether all motors within that block are correctly orientated. The 'end bit' does not become true until the block receives the same from the connected motor block, meaning the 'end bit' input to the microcontroller indicates that all characters are correctly orientated. The final block has both its 'stop' and 'end' inputs connected to the positive power supply. It therefore behaves as though it were connected to a fully defined motor block and acts as the final block in the chain without any unique programming. The master controller requires no knowledge of the number of connected characters, as it continues to send positional commands until the 'feedback bit' is high. This easy scaling is a benefit given the Centre of Braille Innovation's (CBI) push for larger displays.
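The chaining behaviour can be sketched as a small simulation. This is not the PIC firmware: the class and function names are hypothetical, the 'feedback bit' watched by the master is assumed to be the first block's 'stop bit', and the 'stop bit' is assumed to be chained through the blocks in the same way as the 'end bit'.

```python
class MotorBlock:
    """One slave microcontroller driving four motors (two characters)."""

    def __init__(self, downstream=None):
        # downstream=None models the final block, whose 'stop' and 'end'
        # inputs are tied to the positive supply (always high).
        self.downstream = downstream
        self.targets = None
        self.oriented = False

    def receive(self, command):
        """Keep the first command, relay later ones down the line."""
        if self.targets is None:
            self.targets = command
        elif self.downstream is not None:
            self.downstream.receive(command)

    @property
    def stop_bit(self):
        downstream_stop = True if self.downstream is None else self.downstream.stop_bit
        return self.targets is not None and downstream_stop

    @property
    def end_bit(self):
        downstream_end = True if self.downstream is None else self.downstream.end_bit
        return self.oriented and downstream_end

    def orient(self):
        """Pretend every block that has targets reaches them."""
        if self.targets is not None:
            self.oriented = True
        if self.downstream is not None:
            self.downstream.orient()


def build_chain(count):
    """Return the first block of a chain of `count` motor blocks."""
    block = None
    for _ in range(count):
        block = MotorBlock(downstream=block)
    return block


def master_send(first_block, commands):
    """Send commands until the feedback ('stop') bit goes high; return how many were sent."""
    sent = 0
    for command in commands:
        if first_block.stop_bit:
            break
        first_block.receive(command)
        sent += 1
    return sent


if __name__ == "__main__":
    chain = build_chain(3)
    print(master_send(chain, [(0, 45, 90, 135)] * 10), "commands sent")
    chain.orient()
    print("all oriented:", chain.end_bit)
```

The master is handed ten commands but stops after three, without ever being told how long the chain is.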

A video of the working communication process can be seen below.

Software Design

The previous sections show how positional commands can be represented mechanically as braille characters. For the complete image-to-braille process, two pieces of software have to be implemented:

- An optical character recognition (OCR) algorithm.

- A text to Unified English Braille (UEB) code translator.
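For the second item, a minimal sketch of an uncontracted letter-to-dots lookup is given below. It covers only the 26 letters and the space, and it silently skips any other character; capital and number indicators, punctuation and UEB contractions are deliberately left out. The function names are hypothetical and this is not the project's translator.

```python
# Raised dots for each letter in uncontracted braille (dots numbered 1-6).
DOTS = {
    "a": "1", "b": "12", "c": "14", "d": "145", "e": "15",
    "f": "124", "g": "1245", "h": "125", "i": "24", "j": "245",
    "k": "13", "l": "123", "m": "134", "n": "1345", "o": "135",
    "p": "1234", "q": "12345", "r": "1235", "s": "234", "t": "2345",
    "u": "136", "v": "1236", "w": "2456", "x": "1346", "y": "13456",
    "z": "1356", " ": "",
}

def to_cells(text):
    """Convert text to a list of frozensets of raised dots, skipping unknown characters."""
    return [frozenset(int(d) for d in DOTS[ch]) for ch in text.lower() if ch in DOTS]

def to_unicode(cells):
    """Render cells with the Unicode braille patterns block (dot n sets bit n-1)."""
    return "".join(chr(0x2800 + sum(1 << (d - 1) for d in cell)) for cell in cells)

if __name__ == "__main__":
    cells = to_cells("braille")
    print([sorted(cell) for cell in cells])
    print(to_unicode(cells))
```

Each cell produced here is a set of raised dots, which is the form a display driver would need in order to choose pin positions.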

Initially, the commonly used maximally stable extremal regions (MSER) algorithm was used for text extraction. This produced a considerable number of false positives because of its static culling system, and it had no intrinsic notion of the structure of text. The outcome can be seen below with different coloured bounding boxes showing the detected regions. The size of the boxes reflects the standard deviation of the region.

The scenario in which the device will be used is a top-down view of a semi-regular piece of text. The MSER algorithm is a much more general approach to finding areas of interest within an image and is needlessly complex. Instead, lines of text are explicitly searched for using a Harris corner detector across multiple thresholds of light intensity. The weighted corner image can be seen below.

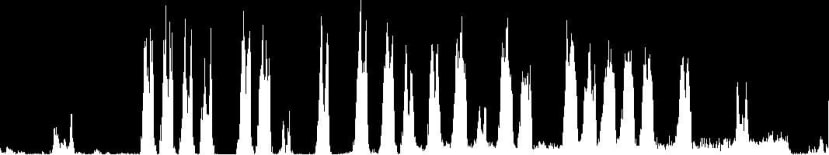

Summing the corners column-wise results in clear peaks at the centre of the lines. By finding these peaks, as well as the troughs in the corner sum, a line can be defined as two troughs straddling a single peak.
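The peak and trough search can be sketched as follows. The sketch assumes the weighted corner image is a list of rows and that the sum is taken across each row, giving one value per vertical position; the function names are hypothetical, and real data would likely need smoothing before the extrema are located.

```python
def row_profile(corners):
    """Sum the corner weights across each image row (one value per vertical position)."""
    return [sum(row) for row in corners]

def find_lines(profile):
    """Return (top, peak, bottom) index triples: two troughs straddling one peak."""
    n = len(profile)
    peaks = [i for i in range(1, n - 1)
             if profile[i - 1] < profile[i] >= profile[i + 1]]
    # The image borders are treated as troughs so edge lines are still bounded.
    troughs = [0] + [i for i in range(1, n - 1)
                     if profile[i - 1] > profile[i] <= profile[i + 1]] + [n - 1]
    lines = []
    for peak in peaks:
        top = max(t for t in troughs if t < peak)
        bottom = min(t for t in troughs if t > peak)
        lines.append((top, peak, bottom))
    return lines

if __name__ == "__main__":
    # Synthetic corner sum with two text lines.
    profile = [0, 1, 5, 9, 4, 1, 0, 2, 7, 8, 3, 0]
    print(find_lines(profile))
```

Each triple gives the vertical extent of one line of text, with the peak marking its centre.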

A constrained line can be seen below. To prepare it for translation, the letters within it need to be separated and ordered. To do this, a local mean threshold is applied, followed by a union-find algorithm, before the connected regions are culled. This culling is based on the height of the line, making it resistant to changes in font size. A post-process of two fills and an erosion then refines the found letters. Compared with MSER, this approach has a considerably lower chance of detecting false positives.
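A minimal sketch of the union-find and culling steps is shown below. It assumes the line has already been thresholded into a binary grid, uses 4-connectivity, and culls regions shorter than a fraction of the line height; the `min_height_ratio` parameter and its default are hypothetical, and the fill and erosion post-processing is omitted.

```python
def find(parent, x):
    """Find the root of x with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def union(parent, a, b):
    """Merge the sets containing a and b."""
    root_a, root_b = find(parent, a), find(parent, b)
    if root_a != root_b:
        parent[root_b] = root_a

def letter_boxes(binary, min_height_ratio=0.3):
    """Return bounding boxes (left, top, right, bottom), ordered left to right."""
    height, width = len(binary), len(binary[0])
    parent = list(range(height * width))
    # Join each foreground pixel to its right and lower foreground neighbours.
    for y in range(height):
        for x in range(width):
            if not binary[y][x]:
                continue
            if x + 1 < width and binary[y][x + 1]:
                union(parent, y * width + x, y * width + x + 1)
            if y + 1 < height and binary[y + 1][x]:
                union(parent, y * width + x, (y + 1) * width + x)
    # Grow one bounding box per connected region.
    boxes = {}
    for y in range(height):
        for x in range(width):
            if binary[y][x]:
                root = find(parent, y * width + x)
                left, top, right, bottom = boxes.get(root, (x, y, x, y))
                boxes[root] = (min(left, x), min(top, y), max(right, x), max(bottom, y))
    # Cull regions that are short relative to the line height.
    kept = [b for b in boxes.values() if b[3] - b[1] + 1 >= min_height_ratio * height]
    return sorted(kept)

if __name__ == "__main__":
    line = [
        [1, 1, 0, 0, 0, 0, 1, 0, 0],
        [1, 0, 0, 0, 0, 0, 1, 0, 0],
        [1, 1, 0, 1, 0, 0, 1, 1, 0],
        [1, 0, 0, 0, 0, 0, 1, 0, 0],
        [1, 1, 0, 0, 0, 0, 1, 0, 0],
    ]
    print(letter_boxes(line))  # the single-pixel speck in column 3 is culled
```

Because the cull threshold scales with the height of the line, the same ratio applies to large and small fonts.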

The found characters are recognised by a neural network with 1280 input neurons per character image. The inputs are computed from a normalised 16 by 16 image of the character, which is consistent with five 16 by 16 feature maps (5 × 256 = 1280):

- The horizontal Sobel derivative.

- The vertical Sobel derivative.

- The local mean image.

- The distance array.

- A discrete cosine transform.

The network was trained on data from the University of California, Irvine (UCI) Machine Learning Repository. The translation for the line above can be seen below.

Limited training data and time during the project resulted in a low level of accuracy, so the open-source OCR engine Tesseract was used for the final prototype.

Conclusion

This project illustrated the practicality of a novel mechanical braille display as well as its integration with OCR. The hardware and software components could be constructed at home thanks to the RS Grassroots fund. Unfortunately, due to Covid-19 restrictions, the mechanical prototype could not be made.

Overall, the project cost £155, a reduction of roughly 85% relative to the £1000 typical of commercial alternatives. This price reduction was the key focus of the project and, with future work, a cheaper display could be released commercially. The project did not aim to be the final step in this solution but instead to act as proof that alternative approaches are viable.