Intel Joule plus Intel RealSense provide instant depth sensing and more

Accelerating the development of powerful, perceptual computing applications.

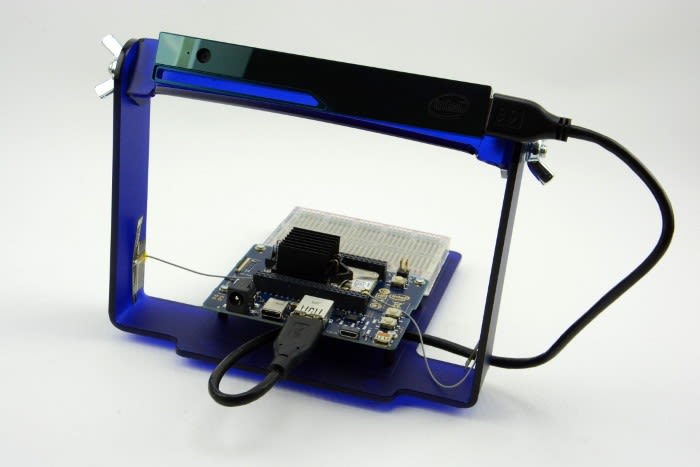

In a previous post I took a first look at the Intel Joule 570x Developer Kit, which comprises the impressively tiny and eminently powerful Joule 570x module, a companion Breakout Board and a reference Linux O/S. In this post an Intel RealSense R200 camera is added to the mix, along with the supporting library and examples, to see what can be achieved with this combination.

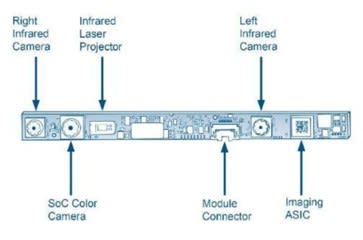

Intel RealSense R200

The Intel RealSense R200 is a lightweight USB 3.0 peripheral with an RGB sensor, two IR sensors, an IR laser projector and an ASIC for onboard processing. It is a longer range depth/3D camera development kit for use with tablet and multifunction devices. It is not targeted at end users, but is instead intended as a tool for engineers to use in creating new applications and hardware platforms.

It’s another impressive little device from Intel and to quote from the documentation:

“The R200 is an active stereo camera with a 70mm baseline. Indoors, the R200 uses a class-1 laser device to project additional texture into a scene for better stereo performance. The R200 works in disparity space and has a maximum search range of 63 pixels horizontally, the result of which is a 72cm minimum depth distance at the nominal 628x468 resolution. At 320x240, the minimum depth distance reduces to 32cm. The laser texture from multiple R200 devices produces constructive interference, resulting in the feature that R200s can be collocated in the same environment. The dual IR cameras are global shutter, while 1080p RGB imager is rolling shutter. An internal clock triggers all 3 image sensors as a group and this library provides matched frame sets.”
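
The minimum depth figures in the quote follow from the usual stereo triangulation relation, in which depth is inversely proportional to disparity. As a rough worked check, assuming an ideal pinhole model (the focal length f in pixels is not stated in the quote and is inferred here from the quoted numbers):

$$
Z = \frac{f\,B}{d}
\quad\Rightarrow\quad
Z_{\min} = \frac{f\,B}{d_{\max}},
\qquad
f \approx \frac{Z_{\min}\,d_{\max}}{B} = \frac{720\ \text{mm} \times 63}{70\ \text{mm}} = 648\ \text{px}
$$

Here B is the 70mm baseline, d_max the 63 pixel search range and Z_min the 72cm minimum depth. By the same relation, the quoted 32cm minimum at 320x240 would correspond to an effective focal length of roughly 288 pixels.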

Graphical desktop

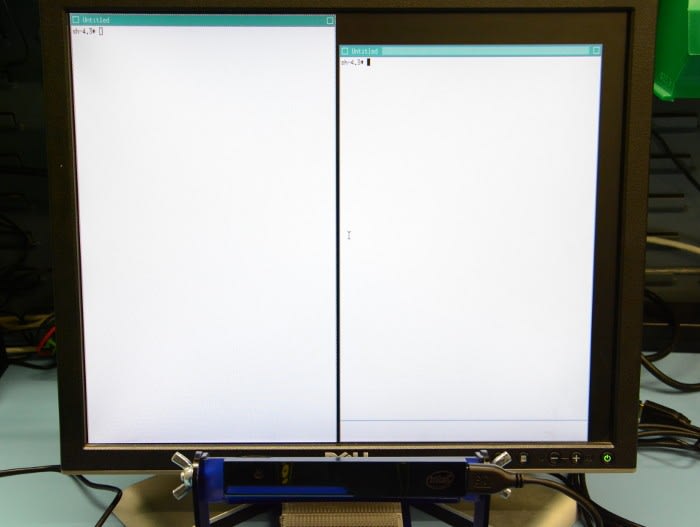

X Windows with the twm window manager

Previously, I accessed the Joule module via the breakout board’s convenient onboard USB UART, before configuring the wireless network and then connecting over SSH. However, the XT version of the reference Ostro Linux distribution that had been written out to the Micro SD card bundles an X server and even desktop software to go with it.

If we type:

startx

The X Windows server starts up and we simply get a basic session with the twm window manager, which has a certain retro appeal and may even be favoured by the more ascetic programmer, but clearly lacks the sort of features that we’ve come to expect with a modern desktop. This session can be killed by typing pkill X into a terminal window.

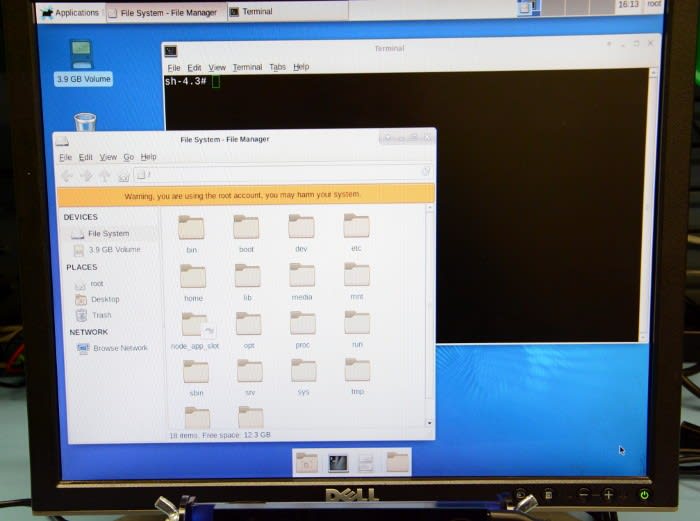

To get a more familiar modern desktop environment we just have to type:

startxfce4

Following which we have a file manager and other useful tools available.

Building librealsense

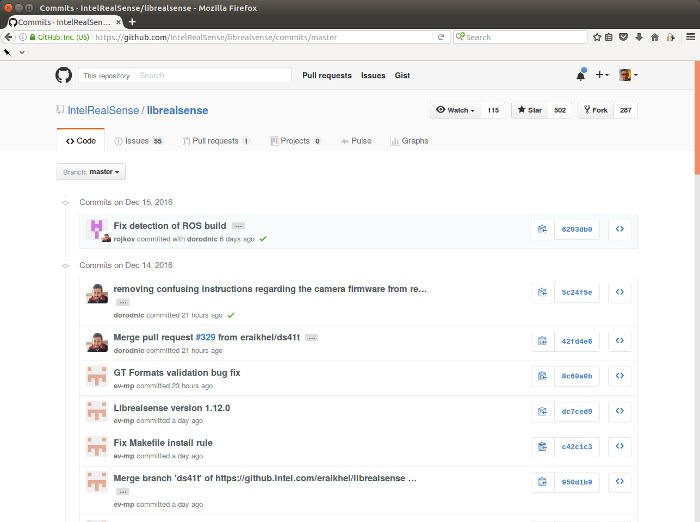

Recent GitHub commits for librealsense

Intel state that the Joule does ship with support for RealSense cameras and if we take a look in /usr/lib, sure enough there is a support library, librealsense.so. However, at the time of writing the current Reference Linux image is v1.0 and this dates from earlier this year, whereas the librealsense open source project on GitHub appears to be getting updated frequently.

One thing to note is that the Reference Linux O/S does not include a package manager. While this might at first seem odd, or even backwards, it makes perfect sense when you consider that the image is created using the Yocto Project tools, which make it easy to bake custom Linux images for mass market devices that contain the required applications and libraries, and only those.

As it happens the provided Linux image comes complete with all the tools and software dependencies we will need to clone and build a new librealsense from source.
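
Before starting a build it can be worth confirming that the tools really are present. The following is a minimal sketch only: the function name missing_tools is hypothetical, and the list of tools to check (git, cmake and make from the commands used in this post, plus a C++ compiler such as g++) is an assumption rather than an official dependency list.

```shell
# Hypothetical helper: print the name of each listed tool that cannot be
# found on PATH. Prints nothing when every tool is available.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
    return 0
}
```

Running `missing_tools git cmake make g++` should print nothing on an image that has everything needed. Any names that are printed would need to be added to the image first.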

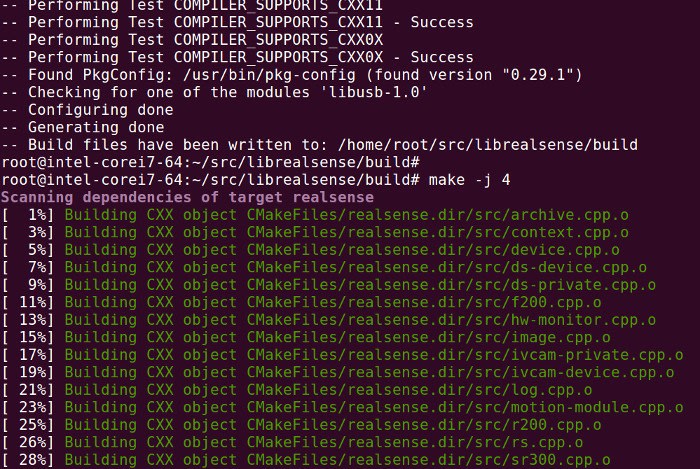

To start with, we need to make sure the camera is not plugged in, following which we can get the latest sources, build and install.

# git clone https://github.com/IntelRealSense/librealsense.git

# cd librealsense

# mkdir build

# cd build

# cmake .. -DBUILD_EXAMPLES:BOOL=true

# make && sudo make install

Two additional steps are required to configure the USB subsystem via udev, so that the RealSense cameras are recognised when plugged in and configured appropriately.

# cp ../config/99-realsense-libusb.rules /etc/udev/rules.d/

# udevadm control --reload-rules && udevadm trigger

When the camera is plugged in we should now see messages printed to the console indicating that it has been detected.

[ 4923.102810] usb 2-2: new SuperSpeed USB device number 3 using xhci_hcd

[ 4923.124075] uvcvideo: Found UVC 1.10 device Intel RealSense 3D Camera R200 (8086:0a80)
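
To check for the camera from a script rather than by eye, the kernel log can be searched for the USB ID shown in the second message. This is a minimal sketch: the function name r200_log_lines is hypothetical, and the ID 8086:0a80 is simply taken from the log output above.

```shell
# Hypothetical helper: read kernel log text on stdin and print only the
# lines that mention the R200's USB vendor:product ID seen in the log above.
# The exit status is that of grep: 0 if a line matched, 1 if none did.
r200_log_lines() {
    grep -i '8086:0a80'
}
```

For example, `dmesg | r200_log_lines` should print the uvcvideo line once the camera has been plugged in, and nothing otherwise.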

Guides for installing librealsense on Linux, Windows and Mac OS X are available on GitHub, but note that when using the Reference Linux it is not necessary to update or patch the kernel, since support is already built into the one provided with the image.

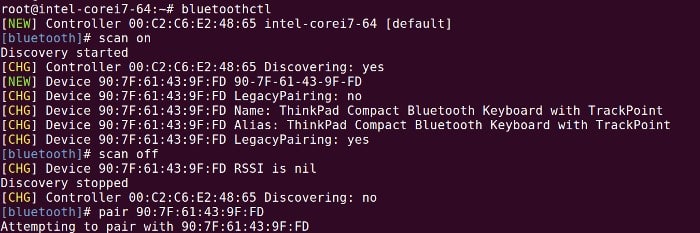

Bluetooth keyboard

Earlier on we used a USB wireless keyboard and mouse, but since we’re now using the USB port to connect the RealSense camera and need a keyboard and pointing device to control the desktop, it was decided to use a Bluetooth keyboard with trackpoint. Pairing Bluetooth devices is simple enough. An alternative would have been to use a USB hub with the keyboard, mouse and R200.

Running the examples

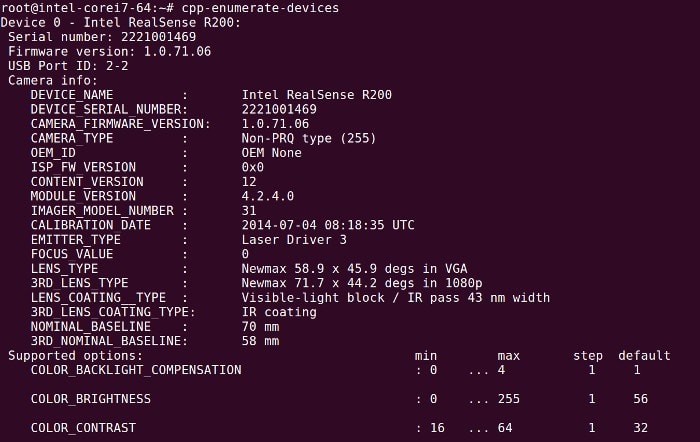

Part of the output from the librealsense enumerate devices program

Since the library gets installed to /usr/local/lib64 we need to define an environment variable so that it will be picked up by the dynamic linker when the examples are run:

# export LD_LIBRARY_PATH=/usr/local/lib64

If this is done before X Windows is started it means that any terminal sessions opened inside Xfce will have the environment variable set.
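
Note that the export above replaces any existing value of LD_LIBRARY_PATH. On a fresh image that is unlikely to matter, but if other library directories are already set, something along the following lines keeps them. This is a sketch only, and the function name add_lib_path is hypothetical.

```shell
# Hypothetical helper: add a directory to the front of LD_LIBRARY_PATH,
# keeping whatever was already there and skipping directories already listed.
add_lib_path() {
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$1:"*) ;;   # already present, nothing to do
        *) LD_LIBRARY_PATH="$1${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ;;
    esac
    export LD_LIBRARY_PATH
}
```

Calling `add_lib_path /usr/local/lib64` then has the same effect as the export above when the variable is unset, and is safe to repeat.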

The examples get installed to /usr/local/bin and all have names starting with cpp-.

cpp-headless

Generated file: cpp-headless-COLOR

This simple example captures 30 frames and writes the last to disk, resulting in 4 files being created when it is run. Above can be seen the image from the colour camera.

Generated file: cpp-headless-INFRARED

The next is from the first IR camera.

Generated file: cpp-headless-INFRARED2

The third is from the second IR camera.

Generated file: cpp-headless-DEPTH

And the final image has the computed depth.

cpp-tutorial-1-depth

The previous example was named “headless” as it does not require a monitor to be attached. However, neither does this example and it can equally be run via an SSH session or UART connection, since depth is represented via use of ASCII characters.
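
The idea of drawing depth with text is easy to try without a camera. The sketch below is not the tutorial's own code: the function name depth_to_ascii, the character ramp and the 1000mm default cut-off are all assumptions chosen for illustration. It reads rows of depth readings in millimetres and prints one character per reading, with denser characters for nearer objects.

```shell
# Hypothetical helper: render rows of whitespace-separated depth readings
# (millimetres) as ASCII. Nearer readings get denser characters; a reading
# of 0 (no data) or at/beyond the cut-off is printed as a space.
depth_to_ascii() {
    awk -v max="${1:-1000}" '
    BEGIN { ramp = "WXBhn:."; n = length(ramp) }
    {
        line = ""
        for (i = 1; i <= NF; i++) {
            d = $i + 0
            if (d <= 0 || d >= max) { line = line " "; continue }
            idx = int(d * n / max) + 1   # 1..n, nearer readings give lower indices
            line = line substr(ramp, idx, 1)
        }
        print line
    }'
}
```

For example, `printf '100 500 900 0 1500\n' | depth_to_ascii 1000` prints `Wh.` followed by two spaces, one for the missing reading and one for the reading beyond the cut-off.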

cpp-pointcloud

This point cloud example only displays objects which are within a certain depth.

cpp-stride

This example is a low latency, multi-threaded demo with callbacks and displays video from the RGB and two IR cameras, together with a depth composition.

There are other examples, but rather than walk through every single one of them, I’ll leave at least a few surprises!

Conclusion

Getting the Intel Joule up and running with the RealSense R200 took no time at all and the examples ran without a hitch, providing virtually instant hands-on experience with the hardware platform capabilities. What’s also interesting is that the examples each weighed in at only 100 to 200 lines of code, which at the upper end includes a GUI window and multiple video streams.

When using this hardware combination it’s all too easy to forget that the impossibly tiny Joule module is doing all the heavy lifting, and an admirable job it does too, delivering truly fantastic performance. Paired with the Intel RealSense R200, this provides formidable perceptual computing capabilities that could be used to develop next generation applications for IoT, augmented reality and much more.
