Embedded Compute Acceleration with NVIDIA Jetson

Follow articleHow do you feel about this article? Help us to provide better content for you.

Thank you! Your feedback has been received.

There was a problem submitting your feedback, please try again later.

What do you think of this article?

Compact compute powerhouse offers potential in applications beyond just AI.

NVIDIA Jetson integrates a general purpose Arm processor together with a sophisticated graphics processing unit (GPU), in an eminently compact solution which provides blistering performance for applications which are able to take advantage of highly parallel compute. Of course, that’s not every application, but it is by no means limited to AI and also includes things such as digital signal processing — for audio, video, wireless and more — cryptography and scientific computing.

In this article, we take a look at how the CUDA platform and APIs are used to support compute acceleration, before going on to look at some typical applications.

CUDA

A modern GPU is a specialised high-performance device which is not unlike a supercomputer, in that it is a highly parallel multi-core system that accelerates workloads which are managed by a host computer. It achieves this by using a single instruction, multiple data (SIMD) architecture, whereby the same operation is performed on multiple data points simultaneously. Not all workloads are suited to SIMD processing, but those which are — such as computer graphics — benefit from significant increases in both raw compute performance and energy efficiency.

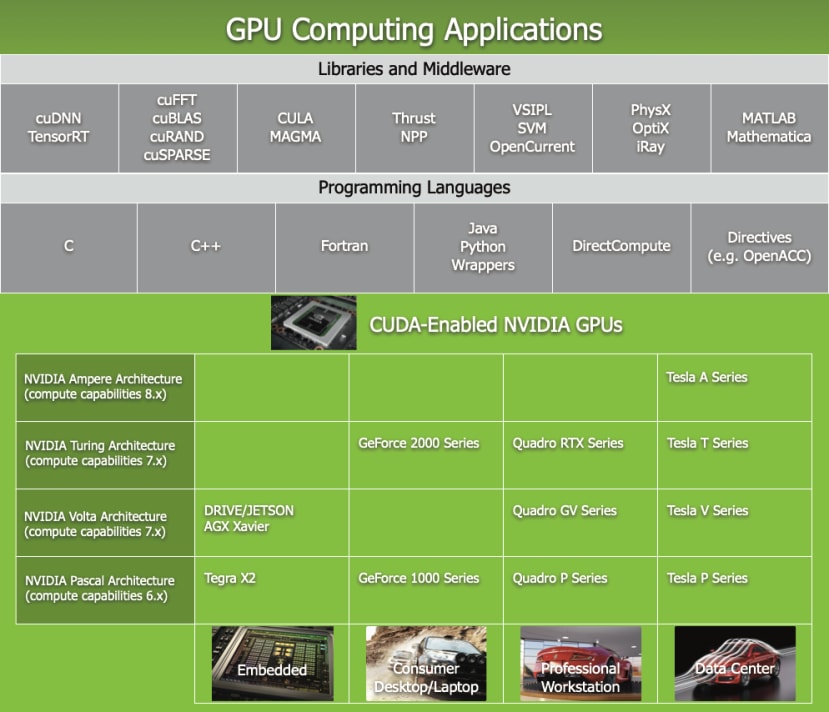

CUDA is a parallel computing platform and application programming interface (API) that allows a GPU to be used for general-purpose computing or “GPGPU”. Meaning that we can take a hardware architecture which was originally designed with graphics in mind and use it to accelerate many other applications. However, it should also be noted that GPUs are now frequently designed with non-graphics processing applications in mind — AI and supercomputing being obvious examples.

Comparisons

It is not uncommon for a system-on-chip (SoC) to feature hardware accelerators for specific tasks, but these tend to be limited to things such as video encoding and decoding, along with particular cryptographic algorithms. Some SoCs integrate SIMD units or vector extensions, but these tend to be much less powerful than a GPU. A digital signal processor (DSP) meanwhile will be designed with specific applications in mind, such as audio processing or wireless communications.

Field-programmable gate arrays (FPGAs) are also used to accelerate key tasks, but require hardware description language (HDL) experience and often make use of custom hardware. Hence FPGA-based compute acceleration tends to be found mainly in certain niche applications.

A GPU has the advantage of being both particularly powerful and software programmable, although the early days of GPGPU presented something of a challenge, due to a lack of software support for use outside of graphics processing. However, the story nowadays is very different indeed, thanks to CUDA and an impressive ecosystem of libraries and SDKs which build on top of this and enable GPUs to be put to use in a wide variety of applications, with increasing ease.

CUDA provides a common platform across NVIDIA devices, ranging from the entry-level Jetson Nano, through to the flagship embedded Jetson AGX Orin (253-9662) , along with typical graphics cards used in personal computers, all the way up to the high-end NVIDIA GPUs used in supercomputers.

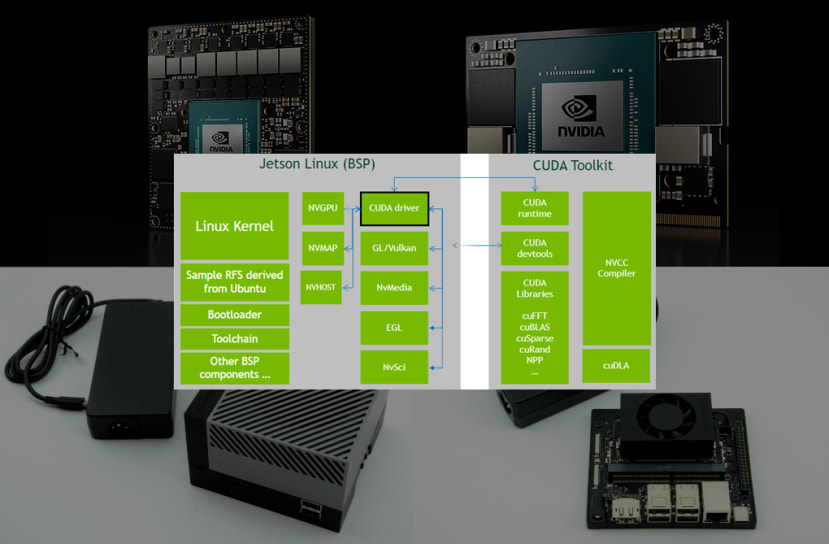

Toolkit

The NVIDIA CUDA Toolkit is described as providing a “development environment for creating high-performance GPU-accelerated applications. With the CUDA Toolkit, you can develop, optimize, and deploy your applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centres, cloud-based platforms and HPC supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library to deploy your application.”

Comprehensive documentation is available and the CUDA C++ Programming Guide provides an excellent introduction to the programming model, showing how its concepts are exposed in C++ by extending the language and allowing the programmer to define C++ functions, called kernels, that, when called, are executed N times in parallel by N different CUDA threads.

int MatrixMultiply(int argc, char **argv,

int block_size, const dim3 &dimsA,

const dim3 &dimsB) {

// Allocate host memory for matrices A and B

unsigned int size_A = dimsA.x * dimsA.y;

unsigned int mem_size_A = sizeof(float) * size_A;

float *h_A;

checkCudaErrors(cudaMallocHost(&h_A, mem_size_A));

unsigned int size_B = dimsB.x * dimsB.y;

unsigned int mem_size_B = sizeof(float) * size_B;

float *h_B;

checkCudaErrors(cudaMallocHost(&h_B, mem_size_B));

cudaStream_t stream;Fragment of Matrix Multiplication example, Copyright (c) 2022, NVIDIA CORPORATION.

Code samples are provided for everything from matrix multiplication and using Message Passing Interface (MPI) with CUDA, through to how with Zero MemCopy kernels can read and write directly to pinned system memory.

CUDA math libraries are also available for Basic Linear Algebra Subprograms (BLAS), Linear Algebra Package (LPACK)-like features, Fast Fourier Transform, random number generation and GPU-accelerated tensor linear algebra, to name but a few.

Building on top of CUDA there are then libraries such as CuPy, a NumPy/SciPy-compatible Array Library for GPU-accelerated Computing with Python, along with no shortage of CUDA accelerated application-specific frameworks.

Applications

Next, we’ll take a look at just a small selection of non-AI applications which can benefit from CUDA accelerated compute.

Signal processing

import cusignal

import cupy as cp

num_pulses = 128

num_samples_per_pulse = 9000

template_length = 1000

x = cp.random.randn(num_pulses, num_samples_per_pulse) + 1j*cp.random.randn(num_pulses, num_samples_per_pulse)

template = cp.random.randn(template_length) + 1j*cp.random.randn(template_length)

pc_out = cusignal.pulse_compression(x, template, normalize=True, window='hamming')cuSignal RADAR pulse compression example.

cuSignal is a GPU-accelerated signal processing library that is based upon and extends the SciPy Signal API. Benefits of which include orders-of-magnitude speed-ups over CPU, zero-copy connection to deep learning frameworks, and optimised streaming. It includes support for convolution, demodulation, filtering, waveform generation, spectrum analysis and more. Up until now part of the RAPIDS ecosystem, the codebase will be moving to become part of CuPy.

While cuSignal provides relatively simple signal processing features, the NVIDIA Aerial SDK provides a framework for building high-performance, software-defined, GPU-accelerated, cloud-native 5G networks. One component of which being cuBB, which features a GPU-accelerated 5G Layer 1 PHY, which it is claimed “delivers unprecedented throughput and efficiency by keeping all physical layer processing within the high-performance GPU memory”.

It should be noted that Aerial SDK is intended for use with server class hardware fitted with high end discrete NVIDIA GPUs such as the GA100. However, it serves as a great example of an advanced CUDA-accelerated signal processing application. Meanwhile, there are opportunities for Jetson devices in embedded use cases, such as communications, spectrum monitoring and RADAR.

Lidar

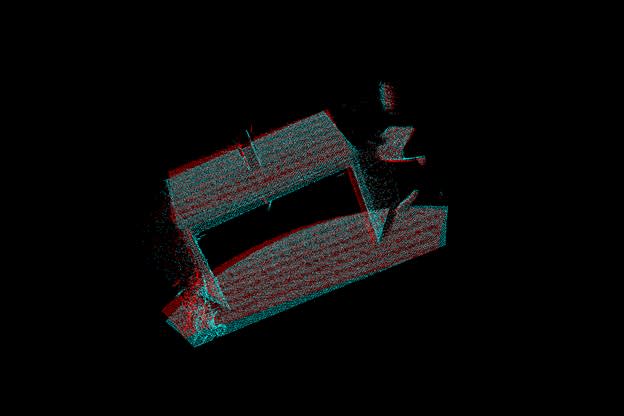

Two sets of point clouds after processing with the ICP algorithm.

Lidar (Light Detection and Ranging) uses laser light to determine the range of objects and through scanning it is possible to map the local environment in 3D, hence it is a key enabler for robotics and autonomous vehicles. One of the challenges however is that processing the resulting 3D point cloud models can be particularly compute-intensive.

Fortunately, such workloads can be CUDA accelerated and NVIDIA provide tools such as the cuPCL libraries for this very purpose. For further details, see the NVIDIA article, Accelerating Lidar for Robotics with NVIDIA CUDA-based PCL.

Java acceleration

Modifying applications in order to make use of GPU-acceleration is all very well, but what if you could just run existing code and take advantage of this? Well, that’s pretty much the holy grail with all types of compute acceleration and if only life were always that simple. However, one attempt at just this for Java workloads is Tornado VM, which provides a JDK plug-in “that allows developers to automatically run Java programs on heterogeneous hardware.”

Heterogeneous hardware employs mixed types of computational units, such as a general-purpose processor and GPU, for example. Tornado VM includes support for CUDA hardware and although Jetson does not feature on their tested devices list, Juan Fumero reports that it works just fine on the original Jetson Nano, demonstrating a 23x speed-up when comparing CUDA-accelerated matrix multiplication with the regular Java sequential implementation.

Wrapping up

While AI provides perhaps the biggest opportunities for GPU acceleration, there are plenty of others and CUDA together with the libraries and frameworks which build on this, are making it increasingly easy to harness the power of highly parallel NVIDIA GPU platforms.

NVIDIA Jetson provides a compact solution for integrating CUDA capabilities into embedded applications, which may make use of a mixture of both AI and non-AI workloads, and where both may benefit from GPU-acceleration. Furthermore, zero-copy memory providing the performance icing on the cake, as data pipelines connecting such components are optimised.

Comments