Simplifying Edge AI with Metropolis Microservices for Jetson


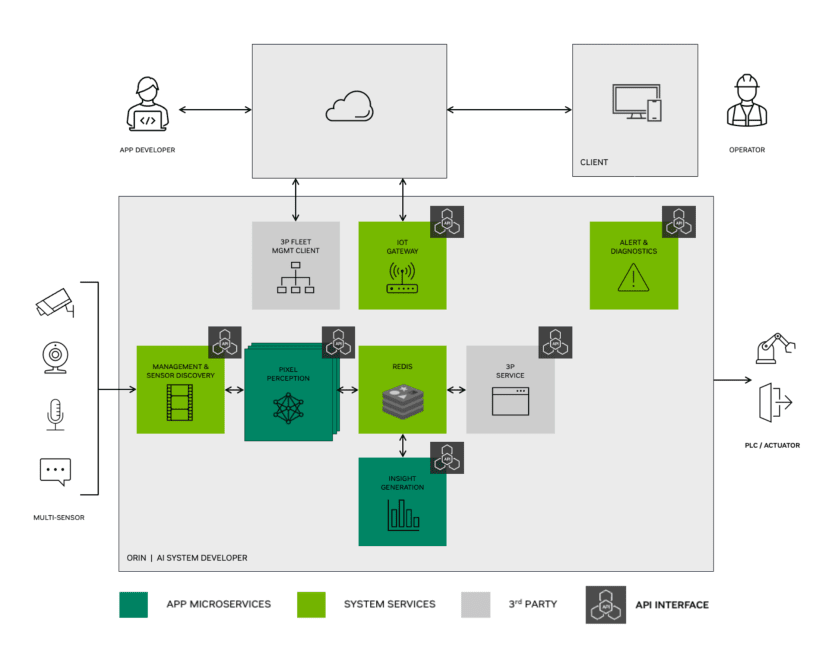

Feature-rich microservices platform makes it easier to develop, deploy and manage edge AI applications on NVIDIA Jetson embedded GPU-accelerated compute.

Microservice architecture is frequently used to take large, complex applications and break them down into many loosely coupled, fine-grained services. Over the past decade or more it has proved an increasingly popular architectural pattern, which has been fundamental to delivering many of the world’s largest software-as-a-service (SaaS) platforms.

The microservices approach goes beyond simply breaking applications down into modules and libraries. Instead, component services are loosely coupled and communicate over the network, typically using technology-agnostic protocols and data formats such as HTTP and JSON. This has many benefits, including that individual services can be more easily developed by different teams, each using the best-fit programming languages, frameworks and database technology.

The benefits associated with microservice architecture are not limited to Internet-scale applications which span many servers and data centres. The same approach can also be utilised within a single system or a small number of systems, as well as across large server estates or device fleets.

NVIDIA Metropolis brings microservices to the Jetson embedded compute platform and builds upon NVIDIA's already significant investment in enabling platform technologies, by simplifying the development, deployment and management of edge AI applications. Metropolis leverages existing pieces of the ecosystem, such as NVIDIA's DeepStream SDK, which is used for developing vision AI applications and services, while adding new ones, such as analytics.

At the time of writing, Metropolis is supported on Jetson AGX Orin (253-9662) and Jetson Orin NX only.

Software stack

The Metropolis stack runs on top of JetPack, the NVIDIA SDK which provides the bootloader, Linux kernel, Ubuntu desktop, CUDA for GPU-accelerated compute, and computer vision libraries, among other components.

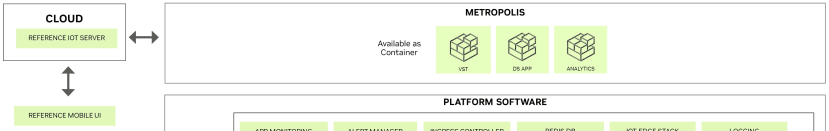

The stack itself is then comprised of three main classes of software: platform services, Metropolis microservices, and applications.

Platform software

Platform software consists of domain-agnostic services that support commonly needed system functionality, including:

- Storage: to provision external storage and allocate to various microservices

- Monitoring: visualize both system and application metrics using dashboards

- Firewall: control ingress and egress network traffic to the system

- IoT gateway: remote, secure access of device APIs to enable rich clients such as mobile apps

The storage service enables auto setup and provisioning of external storage (such as hard disks) attached to a Jetson device. When enabled the service is started at boot by systemd and can be configured to enable things such as disk encryption and usage quotas.

Monitoring components include exporters which expose metrics, metric storage and alerts based on the Prometheus time series database, and visualisation based on the Grafana platform. This enables all manner of system metrics and status to be monitored, such as CPU and GPU utilisation, network throughput, and camera status.

The firewall can be configured to only allow access to the required services and/or impose restrictions based on the source IP address/network, just as with a corporate firewall.
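
The source-based restriction described above boils down to checking whether a client address falls inside an allowed network. The following is a minimal sketch of that logic only, not the Metropolis firewall configuration itself; the `ALLOWED_NETWORKS` list and the `is_source_allowed` helper are hypothetical names introduced for illustration.

```python
import ipaddress

# Hypothetical allowlist: networks permitted to reach services on the device.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("192.168.1.0/24"),  # e.g. a local camera/management LAN
    ipaddress.ip_network("10.0.0.0/8"),      # e.g. a site-wide private range
]


def is_source_allowed(source_ip: str) -> bool:
    """Return True if the source address belongs to any allowed network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_NETWORKS)


if __name__ == "__main__":
    for candidate in ("192.168.1.20", "203.0.113.5"):
        verdict = "allow" if is_source_allowed(candidate) else "deny"
        print(f"{candidate}: {verdict}")
```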

The IoT gateway is part of the Reference Cloud (see below).

Metropolis microservices

The Metropolis Microservices sit above platform services, provide the foundation for building video and sensor processing applications at the edge, and are currently comprised of:

- Video Storage Toolkit: discovery, ingestion and management of camera and sensor streams, video and sensor data storage, video streaming

- DeepStream: Real-time processing of multiple video (camera) and sensor streams through deep learning based inference using pluggable models, tracking and custom CV algorithms

- Analytics: streaming analytics of inference metadata to produce metrics and alerts based on gem capabilities

Microservices are packaged as Docker containers and their functionality is exposed via REST APIs.

REST builds on top of HTTP and is a set of architectural constraints or guidelines, which make APIs faster, more lightweight and easier to develop for than alternative architectures, such as SOAP.

REST characteristics include that client-server communication is stateless, meaning that each request carries all of the information needed to process it and the server retains no client session state between requests. When a client makes a request via a REST API, a representation of the state of the resource is transferred to the client. REST APIs are fundamental to the operation of a great many Internet-scale cloud platforms and are used to enable integration between them, as well as finding use in many enterprise applications.
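
To make the stateless property concrete, the sketch below builds a self-contained HTTP GET request using only the Python standard library. The host name, port, path, query parameter and bearer token are all placeholders assumed for illustration, and are not taken from the Metropolis API documentation. The point is that everything the server needs (resource, parameters, credentials) travels with each request.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical base URL for a Jetson device exposing a REST API.
BASE_URL = "http://jetson.example:8080"


def build_request(path, token, params=None):
    """Build a GET request that carries everything needed to serve it.

    No session is assumed on the server side: the resource path, query
    parameters and credentials are all included in this one request.
    """
    query = f"?{urlencode(params)}" if params else ""
    return Request(
        url=f"{BASE_URL}{path}{query}",
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {token}",  # placeholder credential
        },
        method="GET",
    )


if __name__ == "__main__":
    # The path below is a made-up example, not a documented endpoint.
    request = build_request("/example/fov/count", "demo-token", {"sensorId": "ENDEAVOR"})
    print(request.get_method(), request.full_url)
```

The request is only constructed here, not sent. Passing it to `urllib.request.urlopen` would perform the call against a real endpoint.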

With Metropolis, application logic is also containerised and may be deployed bundled together with the microservices which it depends upon, using Docker Compose. Alternatively, Docker containers may be deployed using configuration management / IT automation tooling, such as Ansible. A containerised approach has clear security benefits and allows software to be deployed remotely, optionally using advanced configuration management tooling and workload orchestration platforms.

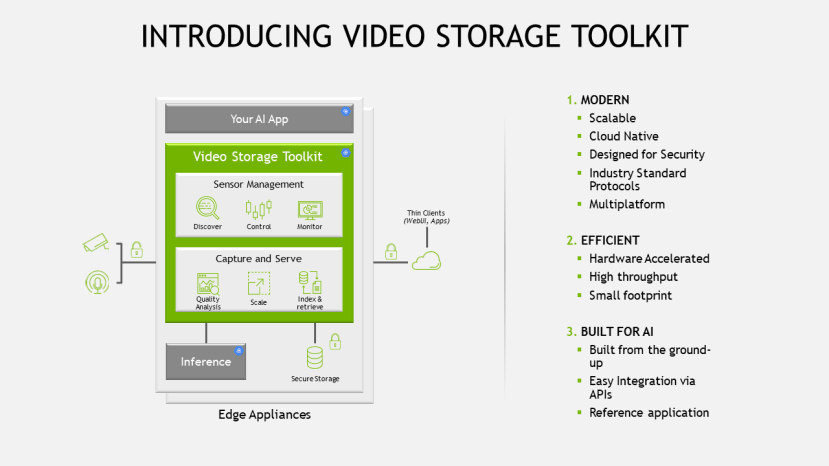

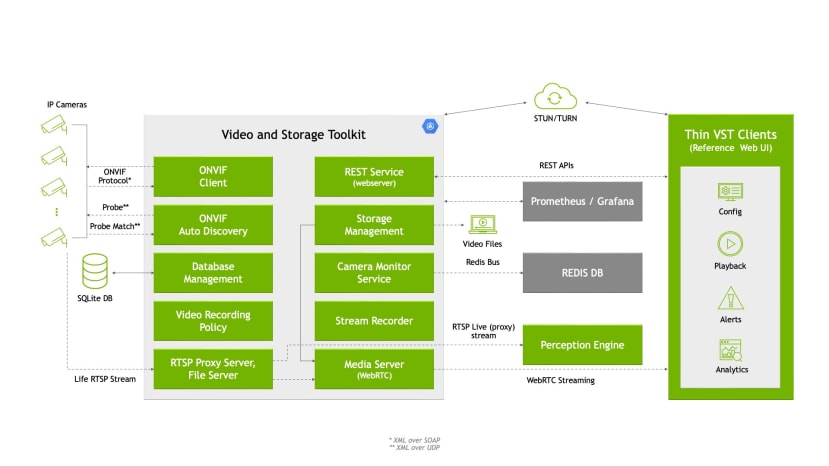

Video Storage Toolkit

Video Storage Toolkit (VST) provides efficient management of cameras and videos on Jetson-based platforms. It is particularly suitable for AI-based video analytics systems, providing hardware-accelerated video decoding, streaming and storage from multiple video sources. VST also includes a reference web user interface for efficient management of your devices.

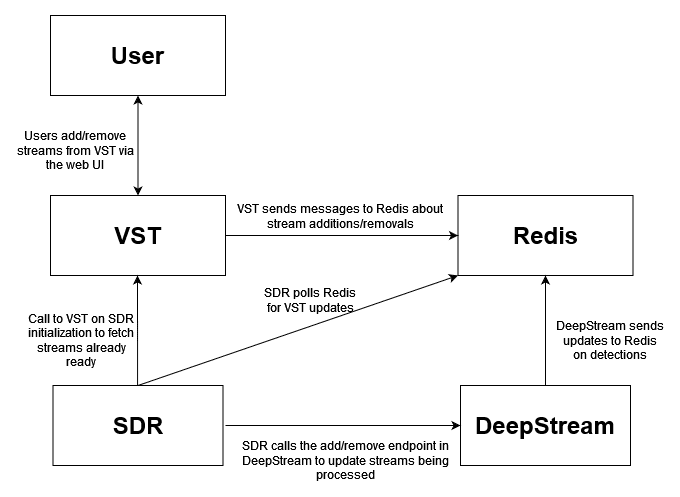

Block diagram of a typical setup.

VST auto-discovers ONVIF-S compliant IP cameras and also allows a custom IP stream to be used as a video source. Video can then be stored, played back at various speeds, or paused at any frame.

DeepStream

The Metropolis Microservices DeepStream application, which is based on DeepStream release 6.4, provides real-time processing of the camera streams presented by VST. Processing includes object detection using the PeopleNet model from NVIDIA, followed by object tracking using the NvDCF tracker plugin available in DeepStream. The output of the application is metadata based on the Metropolis schema, which is sent to a Redis message bus using the msgbroker plugin.

Up to 8 streams per DLA (16 in total) are supported on Jetson AGX Orin. Notable features include:

- Use of DLA to offload inference from GPU

- Use of PeopleNet 2.6 unpruned model for superior accuracy

- Inference done every alternate frame based on the interval parameter within the primary-gie section

- Use of NvDCF multi-object tracker that supports running on PVA backend for further reducing the load on GPU

- Use of Redis message broker for metadata output

- Use of dynamic stream addition to add streams using the sensor distribution and routing (SDR) microservices.
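
As a rough worked example of what the interval setting means for load: in DeepStream, `interval` is the number of consecutive frames skipped between inferences, so a value of 1 gives inference on every alternate frame. The sketch below estimates the resulting inference rate. The 30 frames per second camera rate is an assumption made for illustration, as the article does not state one, and `inferences_per_second` is a hypothetical helper.

```python
def inferences_per_second(streams: int, fps: float, interval: int) -> float:
    """Estimate frames per second actually passed to the detector.

    interval is the number of frames skipped between inferences, so one
    frame in every (interval + 1) is processed for each stream.
    """
    return streams * fps / (interval + 1)


if __name__ == "__main__":
    ASSUMED_FPS = 30  # assumption: typical IP camera frame rate
    print("per DLA (8 streams): ", inferences_per_second(8, ASSUMED_FPS, 1))
    print("total (16 streams):  ", inferences_per_second(16, ASSUMED_FPS, 1))
    print("without frame skip:  ", inferences_per_second(16, ASSUMED_FPS, 0))
```

Under these assumptions, skipping alternate frames halves the detector workload, with the tracker carrying object identities across the frames that are not inferred.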

Metropolis Analytics Microservices

    {
      "alerts": [],
      "counts": [
        {
          "agg_window": "2 sec",
          "histogram": [
            {
              "end": "2020-01-26T19:16:30.000Z",
              "start": "2020-01-26T19:16:29.000Z",
              "avg_count": 1,
              "min_count": 0,
              "max_count": 2
            },
            ...
            {
              "end": "2020-01-26T19:17:29.000Z",
              "start": "2020-01-26T19:17:28.000Z",
              "avg_count": 1.5,
              "min_count": 1,
              "max_count": 3
            }
          ],
          "attributes": [],
          "sensorId": "ENDEAVOR",
          "type": "fov_count"
        }
      ]
    }

Example HTTP GET response from the FOV API.
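
A client could reduce a response of the shape shown above to a per-sensor summary along the following lines. This is a minimal sketch: the embedded sample keeps only the two histogram buckets that are visible in the example (the elided ones are left out), and `summarise_fov` is a hypothetical helper rather than part of any NVIDIA client library.

```python
import json

# Trimmed copy of the example response, with only the two visible buckets.
SAMPLE_RESPONSE = """
{
  "alerts": [],
  "counts": [
    {
      "agg_window": "2 sec",
      "histogram": [
        {"end": "2020-01-26T19:16:30.000Z", "start": "2020-01-26T19:16:29.000Z",
         "avg_count": 1, "min_count": 0, "max_count": 2},
        {"end": "2020-01-26T19:17:29.000Z", "start": "2020-01-26T19:17:28.000Z",
         "avg_count": 1.5, "min_count": 1, "max_count": 3}
      ],
      "attributes": [],
      "sensorId": "ENDEAVOR",
      "type": "fov_count"
    }
  ]
}
"""


def summarise_fov(payload: str) -> dict:
    """Return peak and mean-of-averages people counts for each sensor."""
    summary = {}
    for entry in json.loads(payload)["counts"]:
        buckets = entry["histogram"]
        if entry["type"] != "fov_count" or not buckets:
            continue  # ignore other metric types and empty histograms
        summary[entry["sensorId"]] = {
            "peak": max(bucket["max_count"] for bucket in buckets),
            "mean_of_averages": sum(b["avg_count"] for b in buckets) / len(buckets),
        }
    return summary


if __name__ == "__main__":
    print(summarise_fov(SAMPLE_RESPONSE))
```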

The Behaviour Analytics microservice performs streaming analytics on vision inference metadata and produces insights in the form of time series metrics and alerts, which can be retrieved using the Analytics Web API microservice. Together these provide rich situational awareness of the environment monitored by a camera. The following modules are provided:

- Field of View (FOV): Counting of people in the camera field of view

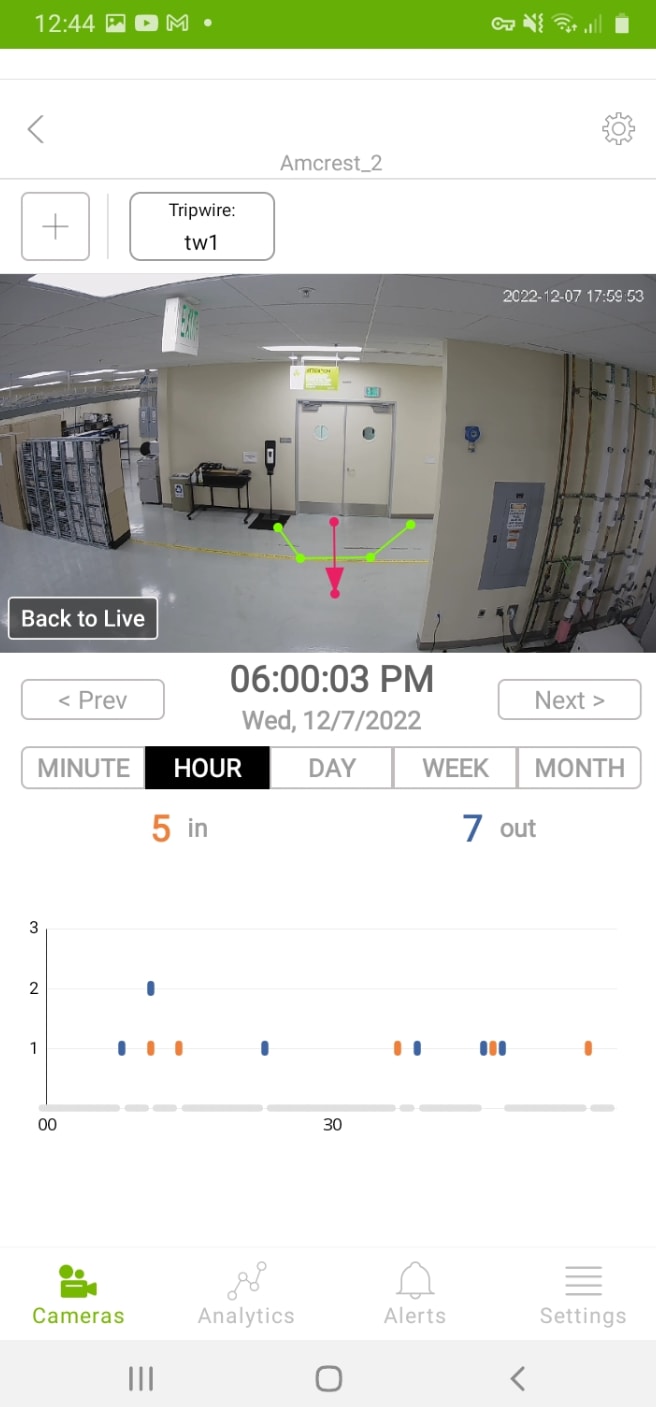

- Tripwire: Counting of people crossing a configured tripwire line segment

- Region of Interest (ROI): Counting of people in the configured region of interest
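
The geometry behind tripwire and region of interest counting can be sketched with two standard tests: segment intersection for a tracked point moving across a line, and ray casting for a point inside a polygon. This is an illustrative sketch of those general techniques, not NVIDIA's implementation. The function names and the use of a single (x, y) point per tracked person are assumptions.

```python
def _orientation(a, b, c):
    """Signed area test: > 0 if c lies to the left of the line a -> b."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def crosses_tripwire(previous, current, wire_start, wire_end):
    """True if the move previous -> current strictly crosses the wire segment."""
    d1 = _orientation(wire_start, wire_end, previous)
    d2 = _orientation(wire_start, wire_end, current)
    d3 = _orientation(previous, current, wire_start)
    d4 = _orientation(previous, current, wire_end)
    # Endpoints of each segment must lie on opposite sides of the other one.
    return d1 * d2 < 0 and d3 * d4 < 0


def in_region(point, polygon):
    """Ray casting: count polygon edges crossed by a ray going right from point."""
    x, y = point
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        if (y1 > y) != (y2 > y):  # edge spans the ray's height
            x_at_y = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_at_y:
                inside = not inside
    return inside


if __name__ == "__main__":
    wire = ((0, 0), (10, 0))
    print(crosses_tripwire((5, -1), (5, 1), *wire))   # steps over the wire
    print(crosses_tripwire((15, -1), (15, 1), *wire))  # passes beyond its end
    square = [(0, 0), (4, 0), (4, 4), (0, 4)]
    print(in_region((2, 2), square), in_region((5, 2), square))
```

The sign of the first orientation value could also be used to tell the direction of a crossing, which matters when entries and exits are counted separately.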

Reference cloud

In the above block diagram, we can also see a connection to a “Reference IoT Server” located in the public cloud. This Reference Cloud capability is used to enable remote, secure access to the APIs of a Metropolis Microservices-based Jetson system. It provides:

- “Always on” TCP connection from device to this cloud through secure provisioning

- API gateway to enable calls to be routed to the appropriate devices based on ID using the TCP connection

- User account support with capability for users to claim devices for access

- Authorization support to control access

These capabilities are likely to prove invaluable during evaluation and prototyping of applications developed using Metropolis. They also serve to demonstrate how a custom cloud component may be developed for use in production applications, enabling remote access to services, along with servicing and maintenance of systems which are deployed in the field. Equally, other cloud services may be integrated in a similar manner, such as those which are made available via partner APIs and address specific requirements.

Reference application

The current release includes a reference Android application to visualise how an end-user client can interact with the system. The app showcases various capabilities of Metropolis microservices, including live and recorded video streaming through WebRTC, analytics creation and visualisation, and alerts. The app interacts with the system solely using REST APIs, either accessed directly (for quick start purposes) or securely through the cloud (for more mature deployments).

The AI NVR application uses VST for camera discovery, stream ingestion, storage and streaming, with notable configuration features including an ageing policy that determines when video will be deleted. DeepStream is used for real-time perception using PeopleNet 2.6. The Analytics configuration specifies the spatial and temporal buffers used in the implementation of line crossing and region of interest. These buffers define the trade-off between latency and accuracy, and can be set according to the requirements of the particular use case.
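
One way to picture the latency versus accuracy trade-off of a temporal buffer is a simple debounce: a person is only reported as present once they have been detected in a minimum number of consecutive frames. The sketch below is an assumed, simplified model for illustration and does not reflect how the Analytics microservice is actually implemented. `confirm_presence` and `min_frames` are hypothetical names.

```python
def confirm_presence(detections, min_frames):
    """Report presence only after min_frames consecutive positive detections.

    A larger min_frames suppresses more spurious single-frame detections
    (better accuracy) but delays the first confirmed report (more latency).
    """
    consecutive = 0
    confirmed = []
    for detected in detections:
        consecutive = consecutive + 1 if detected else 0
        confirmed.append(consecutive >= min_frames)
    return confirmed


if __name__ == "__main__":
    # One-frame flicker at index 1, then a genuine presence from index 3.
    raw = [False, True, False, True, True, True, True]
    print(confirm_presence(raw, min_frames=1))  # reports the flicker too
    print(confirm_presence(raw, min_frames=3))  # ignores it, reports 2 frames late
```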

Above we can see the Jetson AGX Orin hardware setup, which is comprised of two IP cameras connected to and powered via an Ethernet switch, which is in turn connected to the Orin system. A SATA drive is also connected for NVR storage, along with a keyboard, monitor and mouse.

The AI NVR guide provides detailed setup information, which includes how to install the corresponding Android app, and how to deploy the IoT Gateway service to AWS Cloud.

Final words

NVIDIA Metropolis brings the power and flexibility of a microservices architecture, complete with Docker containerisation and lightweight REST APIs, to edge AI applications running on Jetson. It provides advanced video storage and streaming, plus real-time processing/inference and analytics, thereby making it easier and quicker to develop advanced end-user applications. These may be targeted at a single system or securely deployed to a fleet of devices, with the option of additionally integrating with services running on public or private cloud infrastructure.