Who’s afraid of Intelligent Machines?


Fictional robots have always been supremely intelligent and physically much stronger than feeble humans. The reality is that yes, they can be made more robust than us, but as intelligent? Nowhere near. And yet as soon as the word ‘robot’ is mentioned, most people think of the Terminator movies with the evil controlling intelligence of Skynet and humanoid robots looking like chrome-plated human skeletons. Is humanity destined to be enslaved by sentient machines because of our obsession with creating ‘Artificial Intelligence’ (AI)?

Early Mechanical Intelligence – and Fraud

We humans have always been fascinated by ‘Automata’: machines that perform complex tasks without any apparent human intervention. Clocks and watches come into this category, of course: mechanical contrivances that do a simple job well. One of the first machines that appeared to possess real powers of thought was the 18th-century chess-playing automaton known as ‘The Turk’. Of course, it was very much a human brain behind its playing skills, but it did inspire Charles Babbage to design his Difference Engines: early machines for performing mathematical calculations. The Turk wasn’t autonomous, but it did have many of the movement mechanisms needed to create what was later called a ‘Humanoid Robot’. Artificial intelligence would have to wait for the invention of electronics because, as Babbage found out, the mechanical engineering of the time was not up to the task.

Eric the Robot

Fast forward to 1920, when the term ‘Robot’ was first coined in the stage play “Rossum’s Universal Robots” by the Czech writer Karel Čapek. Some years later, in 1928, a humanoid robot called Eric was built to deliver the opening address at a UK Model Engineering exhibition. Eric could stand up, move his arms and head, and “talk”. A working replica is on display at the Science Museum in London.

Again, there was no machine intelligence, but Eric did help generate the popular image of a robot as a somewhat sinister man in a medieval suit of armour. Eric’s teeth flashed with 35,000-volt sparks as he ‘spoke’, perhaps adding to a feeling of unease among the audience! To be honest, though, most audiences nowadays would probably react with amusement rather than fear. Eric may be humanoid in form, but he can’t walk and is not realistic enough to fall into the Uncanny Valley.

Three Laws Safe

Isaac Asimov published his Three Laws of Robotics in 1942 as part of a science fiction short story called ‘Runaround’. This and other stories about human interaction with robots and artificial intelligence were later collected in the book I, Robot. It was one of the first attempts to explore the ethics of future AI, and it introduced the now-famous laws designed to prevent an imagined robot ‘Armageddon’:

- 1st Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- 2nd Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- 3rd Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

A ‘zeroth’ law was added later:

- 0th Law: A robot may not injure humanity, or, by inaction, allow humanity to come to harm.

Asimov was way ahead of his time in predicting the need for rules governing the construction and operation of a machine that could potentially become self-aware and see itself as higher than humanity in the ‘food chain’.
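Read strictly, the laws form a priority ordering: a lower law only comes into play when the higher laws are satisfied equally well. A minimal Python sketch of that reading ranks candidate actions by a tuple of law violations and relies on Python’s element-by-element tuple comparison. The `Action` fields and the simple yes/no judgements are hypothetical simplifications. Deciding whether an action actually ‘harms a human’ is the genuinely hard part, and in ‘Runaround’ itself the plot turns on a weakly given order and a strengthened Third Law ending up in balance rather than being strictly ranked.

```python
from dataclasses import dataclass

@dataclass
class Action:
    """A candidate action, tagged with the laws it would break (hypothetical fields)."""
    name: str
    harms_humanity: bool = False   # Zeroth Law
    harms_human: bool = False      # First Law (including harm through inaction)
    disobeys_order: bool = False   # Second Law
    endangers_robot: bool = False  # Third Law

def violations(action: Action) -> tuple:
    # Tuples compare element by element and False < True, so an action that
    # breaks a higher law always ranks worse than one breaking only lower laws.
    return (action.harms_humanity, action.harms_human,
            action.disobeys_order, action.endangers_robot)

def choose(actions: list) -> Action:
    """Pick the action whose law violations are least severe."""
    return min(actions, key=violations)

# A human is in danger, but the robot has been ordered to stay put.
stay = Action("stay_put", harms_human=True)
rescue = Action("rescue_human", disobeys_order=True, endangers_robot=True)
print(choose([stay, rescue]).name)  # rescue_human

# No human at risk: the order outranks the robot's self-preservation.
obey = Action("walk_into_fire", endangers_robot=True)
refuse = Action("refuse_order", disobeys_order=True)
print(choose([obey, refuse]).name)  # walk_into_fire
```

The strict ordering is easy to code; it is the judgements feeding it that no real robot can yet make reliably.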

The Present Day

It seems incredible, but nearly 90 years after Eric, real (as opposed to fictional) humanoid robots have advanced little in terms of intelligence. The mechanical hardware, with its associated low-level control electronics, is now pretty sophisticated: check out the developments at Boston Dynamics. Asimov’s laws went unchallenged for decades because they applied to future robots imagined to be both physically and intellectually superior to their human creators. Until recently we had little to fear from the unsophisticated multi-jointed arms welding cars together in a factory, because they’re rooted to the spot, only do what their programmers tell them, and fences keep human workers safely out of reach. Now we may have a problem: potentially lethal mobile robots may soon be on the factory or warehouse floor, and AI in the form of Machine/Deep Learning makes it very difficult to guarantee safe operation. Boston Dynamics’ Handle warehouse robot, built to move boxes around, is a good example, and videos of it in action are well worth a look.

Learning to Kill

Very lifelike humanoid robots may freak you out, but if an AI-powered Handle mistook you for a cardboard box, it could cause you serious injury. Is that possible? It’s more than possible: a big problem with Deep Learning is the inadvertent introduction of ‘bias’ during the initial training process. For example, if the learning algorithm were presented with images of all sorts of boxes tagged ‘correct’, along with pictures of other things such as people tagged ‘incorrect’, then it should learn to recognise only boxes as targets. Here’s a possible snag: if all the boxes in the images are the same brown colour and the real warehouse staff all wear matching brown coats, then the inference engine running on the robot, looking at real-time images from its camera, may conclude from its training data that all brown objects are boxes...
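The brown-coat trap can be reproduced with a toy learner. This Python sketch swaps a deep network for a much simpler technique, a one-feature ‘decision stump’ that picks whichever single feature best separates the training set. The two features (`brown` and `boxy`, each a score from 0 to 1) and all the sample values are invented for illustration; a real system would learn from raw pixels.

```python
# Toy illustration of training-data bias with a one-feature "decision stump".
# Features are hypothetical scores in [0, 1] that an image model might produce:
#   "brown": how brown the object looks
#   "boxy":  how box-shaped the object looks
# A label of True means "box" (a valid pick target); False means anything else.

def train_stump(samples):
    """Return the (feature, threshold) pair that classifies the most training samples correctly."""
    best = None
    for feature in samples[0][0]:
        for threshold in sorted({features[feature] for features, _ in samples}):
            correct = sum((features[feature] >= threshold) == label
                          for features, label in samples)
            if best is None or correct > best[0]:
                best = (correct, feature, threshold)
    _, feature, threshold = best
    return feature, threshold

def predict(stump, features):
    """Classify one object as a box (True) or not (False)."""
    feature, threshold = stump
    return features[feature] >= threshold

# Biased training set: every box is brown and nobody in the images wears brown.
biased = [
    ({"brown": 0.9, "boxy": 0.9}, True),
    ({"brown": 0.9, "boxy": 0.8}, True),
    ({"brown": 0.9, "boxy": 0.4}, True),   # box photographed at an awkward angle
    ({"brown": 0.1, "boxy": 0.2}, False),
    ({"brown": 0.2, "boxy": 0.5}, False),  # person in a bulky coat
    ({"brown": 0.1, "boxy": 0.1}, False),
]

# The fix described in the text: add brown-coated staff to the training set.
debiased = biased + [
    ({"brown": 0.9, "boxy": 0.2}, False),
    ({"brown": 0.9, "boxy": 0.3}, False),
]

worker = {"brown": 0.9, "boxy": 0.25}  # a brown-coated worker on the warehouse floor

for name, data in (("biased", biased), ("debiased", debiased)):
    stump = train_stump(data)
    print(name, stump, "worker is a box?", predict(stump, worker))
# biased ('brown', 0.9) worker is a box? True
# debiased ('boxy', 0.4) worker is a box? False
```

On the biased set, colour separates the examples perfectly while shape does not, so the learner latches onto colour. A deep network is far more complex, but the failure mode is the same: it learns whatever separates the training data most cleanly, whether or not that is the feature you intended.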

Bias is a very real limitation of Deep Learning. It can be overcome, in this case by including plenty of brown-coated staff in the original image set. Very often it’s not that simple, and a huge number of training images may be needed to ensure accurate recognition. You will only find out how good your dataset is when it’s tried out in the real world. Some object recognition software for a driverless car application worked just fine with test images, but failed miserably out on the road. It’s not an exact science: you work with probabilities, not certainties, just like human intelligence. The question is: are we prepared to work alongside machines that could make mistakes because, just like us, they learn their skills rather than being explicitly programmed?
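The ‘probabilities, not certainties’ point can be made concrete. Classifiers typically produce a raw score for each class, and a softmax function turns those scores into probabilities; what the robot then does with, say, a 60% ‘box’ is a design decision. This Python sketch assumes a hypothetical two-class model and an arbitrary 95% confidence threshold. It illustrates the idea and does not describe how any particular warehouse robot works.

```python
import math

def softmax(scores):
    """Turn raw classifier scores (logits) into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def decide(scores, min_confidence=0.95):
    """Hypothetical safety policy: act only when the model is confident enough."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    if label == "box" and probs[label] >= min_confidence:
        return "pick"
    return "stop and ask a human"

print(decide({"box": 4.0, "person": 0.5}))  # pick (about 97% "box")
print(decide({"box": 1.2, "person": 0.8}))  # stop and ask a human (about 60% "box")
```

Raising `min_confidence` makes the robot more cautious but also makes it stop more often. Choosing that trade-off, and demonstrating that it is safe, is the hard part.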

Updating the three laws

Are you scared of robots yet? There have been a number of attempts in recent years to improve upon Asimov’s laws and make them useful to current designers. The UK research councils EPSRC and AHRC held a workshop in 2010 to come up with a set of Principles of Robotics for design and development engineers. These principles apply to the design and application of ‘real’ robots, keeping full responsibility for their actions firmly in the human domain. There is no blaming an autonomous vehicle’s AI for having learned bad habits and causing a fatal crash, for instance. Machine sentience is not considered here (yet).

However, there are others who propose rights for robots. Consider these proposed principles from robotics physicist Mark Tilden:

- A robot must protect its existence at all costs.

- A robot must obtain and maintain access to its own power source.

- A robot must continually search for better power sources.

These principles apply to a sentient, living robot. I do not want to be still around when my toaster demands its freedom from the oppressive environment of my kitchen.

Some lateral thinking

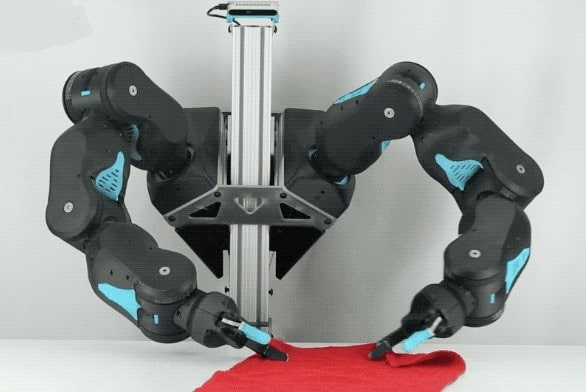

Industry has been rather slow to introduce Cobots to workshops and factory floors. This may be due partly to excessive marketing hype about their usefulness, but also to co-worker resistance on safety grounds. UC Berkeley have come up with a solution: a Soft Cobot called Blue, based on Soft Robot technology. Even small robot arms are strong and capable of injuring a human who gets in the way. Elaborate measures are taken to stop this happening, adding to the cost. The problem lies with the power of the joint motors and the rigidity of the arm needed to ensure precise movement. But for the most popular tasks assigned to Cobots, this precision and strength are not required. Most just pick things up and put them down somewhere else, or perhaps pick up a tool when requested and hand it to the human engineer on the opposite side of the workbench. As both these jobs are within the scope of human strength and dexterity, why bother with unnecessary power and precision? Enter Blue: the plastic, rubber-band-driven, inherently safe robot helper.

I bet you wouldn’t be afraid of that robot – even if its AI brain did start harbouring thoughts of world domination.

Finally…

As a reminder that Terminator-style AI is probably still a very long way off, consider IBM’s Watson, the AI computer that beat its human competitors to win the US quiz show Jeopardy. In 2014 IBM invested a great deal of money in Watson and started a project which should have seen it take over the diagnostic role of medical practitioners. To date there is little to show for all the effort. The input data, including doctors’ notes and patient medical records, seems to be too unstructured and complicated for even Watson to find hidden patterns in. Someday perhaps. Maybe when the Next Big Breakthrough comes along to replace Deep Learning.

If you're stuck for something to do, follow my posts on Twitter. I link to interesting articles on new electronics and related technologies, retweeting posts I spot about robots, space exploration and other issues.