Lidar, Radar & Digital Cameras: the Eyes of Autonomous Vehicles


Automotive electronics have been steadily increasing in quantity and sophistication since the introduction of the first engine management unit and electronic fuel injection. Each new feature requires the processing of data from new types of sensor in real-time.

ADAS and the Sensor Explosion

For example, the catalytic converter used to reduce Carbon Monoxide (CO) emissions from a petrol engine exhaust can only work if the intake fuel/air mixture is accurately metered and the ignition timing is equally precise. A special sensor called an Air Mass Meter measures the quantity of air flowing into the engine; another sensor measures the fuel flow. Emissions control is a closed-loop system with feedback from an exhaust gas monitoring device called an Oxygen Sensor. The first Electronic Control Units (ECUs) fitted to cars performed an engine management function, controlling the fuel injection and ignition timing to ensure maximum efficiency and minimum exhaust pollution. ECU reliability was not considered a safety issue at that time. Early anti-lock braking systems (ABS) were entirely mechanical-hydraulic and designed to be ‘fail-safe’. And they were. Sort of.
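The closed-loop principle can be illustrated with a deliberately simplified sketch. It assumes a ‘switching’ oxygen sensor that only reports rich or lean, a stoichiometric air/fuel ratio of about 14.7:1 for petrol, and a simple integral correction (‘fuel trim’). The function names, step size and the 5% injector error in the example are hypothetical; a real ECU’s control strategy is considerably more involved.

```python
STOICH_AFR = 14.7  # approximate stoichiometric air/fuel mass ratio for petrol


def base_fuel_mass(air_mass_g: float) -> float:
    """Open-loop fuel mass (g) for the air mass reported by the air mass meter."""
    return air_mass_g / STOICH_AFR


def update_trim(trim: float, sensor_rich: bool, step: float = 0.01) -> float:
    """Integral-style correction: remove fuel when the O2 sensor reads rich, add it when lean."""
    return trim - step if sensor_rich else trim + step


def simulate(air_mass_g: float, injector_error: float, cycles: int = 50) -> float:
    """Run the loop against injectors that deliver (1 + injector_error) times the
    commanded fuel. Returns the final fuel trim (hypothetical illustration only)."""
    trim = 0.0
    for _ in range(cycles):
        commanded = base_fuel_mass(air_mass_g) * (1.0 + trim)
        delivered = commanded * (1.0 + injector_error)
        afr = air_mass_g / delivered
        sensor_rich = afr < STOICH_AFR  # switching sensor: below stoichiometric means rich
        trim = update_trim(trim, sensor_rich)
    return trim


if __name__ == "__main__":
    # Injectors delivering 5% too much fuel: the trim ends up dithering around
    # -4.8% (1/1.05 - 1), the correction that cancels the error.
    print(f"final trim: {simulate(air_mass_g=0.5, injector_error=0.05):+.3f}")
```

The feedback loop never needs to know *why* the mixture is off; it simply keeps nudging the fuel until the oxygen sensor flips, which is what makes closed-loop control robust to worn or mis-calibrated components.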

Nowadays digital rotation sensors on each wheel provide data to another ECU which is capable of overriding the driver’s footbrake (ABS) and accelerator (Traction Control) inputs. This is an early component of what is now called an Advanced Driver-Assistance System (ADAS), and reliability is very much a safety issue.
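To see how wheel-speed data lets an ECU intervene, here is a minimal sketch of one common idea: comparing each wheel’s speed with a vehicle-speed reference to compute longitudinal slip. Using the median of the four wheel speeds as the reference, and a 20% slip threshold, are simplifying assumptions for illustration; production ABS and traction control estimate vehicle speed and choose thresholds far more carefully.

```python
from statistics import median


def slip_ratio(reference_speed: float, wheel_speed: float) -> float:
    """Longitudinal slip: positive when the wheel turns slower than the vehicle
    (braking), negative when it turns faster (wheelspin)."""
    if reference_speed <= 0.0:
        return 0.0  # vehicle stationary: slip undefined, treat as zero
    return (reference_speed - wheel_speed) / reference_speed


def classify_wheels(wheel_speeds_mps: list[float], threshold: float = 0.2) -> list[str]:
    """Label each wheel 'lock' (ABS would release brake pressure), 'spin' (traction
    control would cut torque) or 'ok'. The threshold is a hypothetical value."""
    v_ref = median(wheel_speeds_mps)  # crude vehicle-speed estimate from the four wheels
    labels = []
    for w in wheel_speeds_mps:
        s = slip_ratio(v_ref, w)
        if s > threshold:
            labels.append("lock")
        elif s < -threshold:
            labels.append("spin")
        else:
            labels.append("ok")
    return labels


if __name__ == "__main__":
    print(classify_wheels([20.0, 20.0, 19.5, 12.0]))  # -> ['ok', 'ok', 'ok', 'lock']
    print(classify_wheels([15.0, 15.0, 15.0, 25.0]))  # -> ['ok', 'ok', 'ok', 'spin']
```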

Image source: NVIDIA

Vision Systems – Sensors

Before the DARPA Grand Challenge(s) and Google caused the development of autonomous vehicles to go viral, the sensors for ADAS were fairly simple, sampling rates were low and ECU processing was well within the capabilities of a fairly weedy microcontroller. All that changed when it was realised that replacing human sensory input (eyes) and image processing (brain) was going to be a mammoth task. Up to that point, the most sophisticated system for monitoring what was happening outside the car was ‘parking radar’, which is actually based on ultrasonic transducers! The search was on to develop a vision sensor as good as the human eye. Or so it would seem. In fact, when you lay out the specification for an artificial eye able to provide the quality of data necessary for driver automation, the human eye seems rubbish by comparison. Consider this loose specification for our vision system:

- Must provide 360° horizontal coverage around the vehicle

- Must resolve objects in 3D very close to, and far from, the vehicle

- Must resolve/identify multiple static/moving objects up to maximum range

- Must do all the above in all lighting and weather conditions

- Finally, must provide all this data in ‘real-time’.

Now see how well human vision meets these requirements:

- Two eyes provide up to 200° horizontal field of view (FOV) with eye movement

- 3D (binocular) vision exists for 130° of the FOV

- Only central 45 to 50° FOV is ‘high-resolution’ with maximum movement & colour perception

- Outside the central zone, perception falls off rapidly – ‘peripheral vision’

- Automatic iris provides good performance in varying lighting conditions

- Equivalent video ‘frame rate’ good in central zone, bad at limits of peripheral vision

- Each eye has a ‘blind-spot’ where the optic nerve joins the retina

- All the above ignore age-, disease- and injury-related defects, of course

The upshot of this is that the human driver’s eyes only provide acceptable vision over a narrow zone looking straight ahead. And then there’s the blind spot. Given the limitations of eyeball ‘wetware’, why don’t we see life as if through a narrow porthole with part of the image missing? The answer lies with the brain: it interpolates or ‘fills in the blanks’ with clever guesswork. But it’s easily fooled.

Artificial Eyes – Digital Video Camera

The digital video (DV) camera is an obvious candidate for an artificial eye and in many ways offers superior performance to its natural counterpart:

- Maintains high pixel and colour resolution across the full width of its field of view

- Maintains constant ‘frame-rate’ across the field of view

- Two cameras provide stereoscopic 3D vision

- It’s a ‘passive’ system, so no co-existence problems with other vehicles’ transmissions

- Maintains performance over time – doesn’t suffer from hay-fever or macular degeneration
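The stereoscopic point can be made concrete with the standard pinhole-camera relationship for a rectified stereo pair: depth Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the disparity (horizontal pixel shift) of a feature between the two images. The sketch below uses a hypothetical 1000-pixel focal length and 0.3m baseline, and also shows why depth precision degrades with range: a one-pixel disparity error costs roughly Z²/(f·B) metres.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 1.0) -> float:
    """Approximate depth uncertainty from a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f, b = 1000.0, 0.3  # hypothetical camera: 1000 px focal length, 30 cm baseline
    for d in (60.0, 10.0, 3.0):
        z = stereo_depth(f, b, d)
        print(f"disparity {d:5.1f} px -> depth {z:6.1f} m, "
              f"+/- {depth_error(f, b, z):5.2f} m per pixel of disparity error")
```

With these assumed values a one-pixel error is under 10cm at 5m but over 30m at 100m, which is one reason stereo cameras are usually paired with radar or Lidar for long-range distance estimation.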

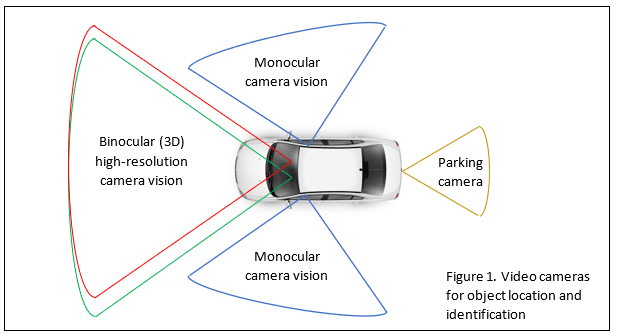

Fig.1 above shows a system suitable for ADAS, providing such functions as forward collision avoidance, lane departure warnings, pedestrian detection, parking assistance and adaptive cruise control. However, the lack of 360° coverage makes the design unsuitable for Level 4 and 5 autodriving. Full around-the-car perception is possible, but it takes at least six cameras and an enormous amount of digital processing. Nevertheless, such a system matches or exceeds the capabilities of human eyes. Three areas of concern remain though:

- Performance in poor lighting conditions, i.e. at night

- Performance in bad weather. What happens when the lenses get coated with dirt or ice?

- Cost: expensive, ruggedized cameras are needed that can work over a wide temperature range

It’s not just the ability to see traffic ahead that’s impaired; lane markings may be obscured by water and snow, rendering any lane departure warning system unreliable.
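The ‘at least six cameras’ figure follows from simple geometry. As a sketch, assuming identical cameras spaced evenly around the car, each with horizontal field of view H and a required overlap O with each neighbour (for image stitching), the count is ceil(360 / (H - O)). The 70° lens and 10° overlap below are hypothetical values chosen for illustration.

```python
import math


def cameras_for_full_coverage(hfov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of evenly spaced cameras giving 360 degrees of horizontal
    coverage, when each adjacent pair must share overlap_deg of their fields of view."""
    effective = hfov_deg - overlap_deg  # new coverage contributed by each camera
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360.0 / effective)


if __name__ == "__main__":
    print(cameras_for_full_coverage(70.0, 10.0))   # -> 6
    print(cameras_for_full_coverage(120.0, 10.0))  # -> 4, but with lower angular resolution per pixel
```

Wider lenses cut the camera count, but each pixel then covers a larger angle, so distant objects are resolved less well: a trade-off between hardware cost and image quality.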

Artificial Eyes – Lidar

Light Detection and Ranging, or Lidar, operates on the same principle as conventional microwave radar. Pulses of laser light are reflected off an object back to a detector and the time-of-flight is measured. The beam is so narrow, and the scanning rate so fast, that accurate 3D representations of the environment around the car can be built up in ‘real-time’. Lidar scores over the DV camera in a number of ways:

- Full 360° 3D coverage is available with a single unit

- Unaffected by light level and has better poor-weather performance

- Better distance estimation

- Longer range

- Lidar sensor data requires much less processing than camera video
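The time-of-flight principle reduces to range = c·t/2, since the pulse travels out and back. Each return, tagged with the beam’s azimuth and elevation angles at the moment of firing, becomes one 3D point in the map. The sketch below shows both steps; the axis convention (x forward, y left, z up) and the function names are assumptions for illustration, not any particular scanner’s data format.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s (vacuum value; the difference in air is negligible here)


def tof_range(round_trip_s: float) -> float:
    """Range (m) to the reflecting object: the pulse covers the distance twice."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float,
                 elevation_deg: float) -> tuple[float, float, float]:
    """Convert one Lidar return to x (forward), y (left), z (up) in the sensor frame."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    r = tof_range(200e-9)  # a 200 ns round trip
    print(f"range: {r:.2f} m")  # about 30 m
    # One return from a beam pointing 90 degrees to the left, 2 degrees below horizontal
    print(to_cartesian(r, 90.0, -2.0))
```

The same relation shows why Lidar needs precise timing electronics: each nanosecond of timing error corresponds to about 15cm of range.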

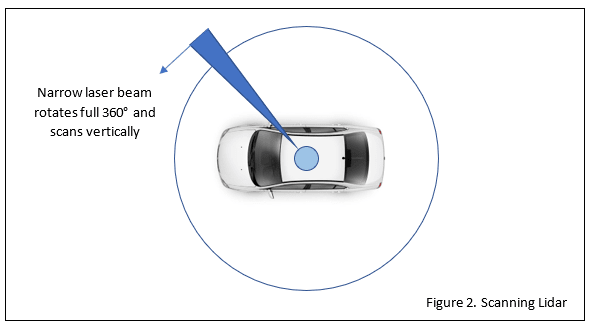

A Lidar scanner mounted on the roof of a car (see Fig.2 above) can, in theory, provide nearly all the information needed for advanced ADAS or autodriving capabilities. The first Google prototypes sported the characteristic dome and some car manufacturers believe that safe operation can be achieved with Lidar alone. But there are downsides:

- Resolution is poorer than the DV camera

- It can’t ‘see’ 2D road markings, so no good for lane departure warnings

- For the same reason, it can’t ‘read’ road signs

- The prominent electro-mechanical hardware has up to now been very expensive

- Laser output power is limited as light wavelengths of 600-1000nm can cause eye damage to other road users. Future units may use the less damaging 1550nm wavelength

The cost of Lidar scanners is coming down as they go solid-state, but this has meant a drop in resolution due to fewer channels being used (perhaps as few as 8 instead of 64). Some are designed to be distributed around the car, each providing partial coverage, and avoiding unsightly bulges on the roof.
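Why fewer channels means lower resolution can be shown with a rough calculation. Assuming a hypothetical 30° vertical field of view with the channels (typically one laser/detector pair per scan line) spread evenly across it, the angle between adjacent scan lines, and hence the vertical gap between them at a given range, grows quickly as the channel count drops.

```python
import math


def line_spacing_deg(vertical_fov_deg: float, channels: int) -> float:
    """Angle between adjacent scan lines, assuming channels are spread evenly across the FOV."""
    if channels < 2:
        raise ValueError("need at least two channels to define a spacing")
    return vertical_fov_deg / (channels - 1)


def gap_at_range(range_m: float, spacing_deg: float) -> float:
    """Approximate vertical distance (m) between adjacent scan lines at a given range."""
    return range_m * math.tan(math.radians(spacing_deg))


if __name__ == "__main__":
    fov = 30.0  # hypothetical vertical field of view, degrees
    for ch in (64, 8):
        s = line_spacing_deg(fov, ch)
        print(f"{ch:2d} channels: {s:.2f} deg between lines, "
              f"{gap_at_range(50.0, s):.2f} m gap at 50 m")
```

In this idealised model a 64-channel unit leaves gaps of roughly 0.4m at 50m, while an 8-channel unit leaves gaps of several metres, wide enough for a pedestrian to fall between two scan lines.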

Artificial Eyes – Radar

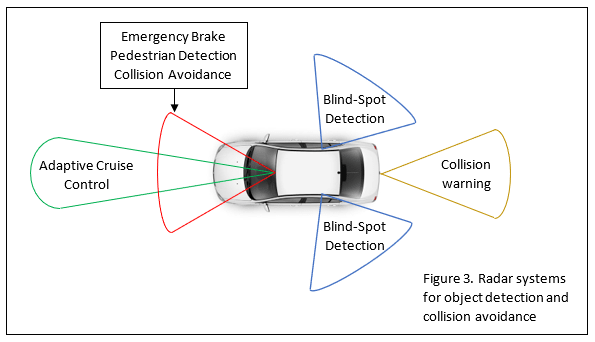

Microwave radar can be used for object detection from short (10m) to long range (100m). It can handle functions such as adaptive cruise control, forward collision avoidance, blind-spot detection, parking assistance and pre-crash alerts (see Fig.3 above).

Two frequency bands are in common use: 24-29GHz for short range and 76-77GHz for long range work. The lower band has power restrictions and limited bandwidth because it’s shared with other users. The higher band (which may soon be extended to 81GHz) has much better bandwidth, and higher power levels may be used. Higher bandwidth translates to better resolution for both short and long range working, and may soon result in the phasing out of 24-29GHz for radar.

Automotive radar is available in two forms: simple pulse time-of-flight and Frequency-Modulated Continuous-Wave (FMCW). FMCW radar has a massive amount of redundancy in the transmitted signal, enabling the receiver to operate in conditions of appalling RF noise. This means that you can have either greatly extended range in benign conditions, or tolerate massive interference at short range. Radar is not good enough for autodriving on its own, but it does have some plus points:

- Unaffected by light level and has good poor-weather performance

- It’s been around for a long time, so well-developed automotive hardware on the market

- Cheaper SiGe technology is replacing GaAs in new devices

Radar has its uses, but limitations confine it to basic ADAS:

- Radar doesn’t have the resolution for object identification, only detection

- 24-29GHz band radar is unable to differentiate reliably between multiple targets

- FMCW radar requires complex signal processing in the receiver
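Two standard radar relationships lie behind these points. Range resolution, the smallest separation at which two targets can be told apart, is ΔR = c/(2B), where B is the swept bandwidth; this is why the extra bandwidth of the higher band improves resolution, and why narrow-band radar struggles to separate multiple targets. In FMCW radar the receiver mixes the echo with the transmitted chirp, and the resulting beat frequency f_b is proportional to range: R = c·f_b·T/(2B) for a linear chirp of duration T. The bandwidths and chirp parameters in this sketch are illustrative assumptions, not figures for any particular device.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_resolution(bandwidth_hz: float) -> float:
    """Minimum separation (m) at which two targets appear as distinct returns: c / (2B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


def beat_frequency(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Beat frequency (Hz) for a stationary target at range_m, with a linear chirp
    sweeping bandwidth_hz in chirp_s seconds (Doppler shift ignored)."""
    slope = bandwidth_hz / chirp_s  # chirp slope, Hz per second
    return 2.0 * range_m * slope / SPEED_OF_LIGHT


def range_from_beat(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Invert beat_frequency(): R = c * f_b * T / (2B)."""
    return SPEED_OF_LIGHT * beat_hz * chirp_s / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    for label, bw in (("250 MHz sweep", 250e6), ("1 GHz sweep", 1e9), ("4 GHz sweep", 4e9)):
        print(f"{label}: range resolution {range_resolution(bw) * 100:.1f} cm")
    fb = beat_frequency(30.0, 1e9, 50e-6)  # hypothetical 1 GHz chirp lasting 50 microseconds
    print(f"target at 30 m -> beat {fb / 1e6:.2f} MHz -> {range_from_beat(fb, 1e9, 50e-6):.1f} m")
```

Measuring range as a frequency rather than a time is what makes FMCW attractive, but extracting those beat frequencies (usually with an FFT) is exactly the ‘complex signal processing in the receiver’ listed above.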

The Brain

Both Lidar and DV cameras require incredibly powerful computers to turn their output signals into a 3D map of the world around the car, with static objects identified, and moving ones identified and tracked in real-time. Such processing power is becoming available in single-board formats, small enough and cheap enough to bring Levels 4 and 5 autodriving within reach. For example, the NVIDIA DRIVE Hyperion™ is a reference architecture for NVIDIA’s Level 2+ autonomy solution, consisting of a complete sensor suite and AI computing platform, along with the full software stack for autonomous driving, driver monitoring, and visualization. Then there’s the Renesas R-Car H3 SiP module, crammed with eight ARM cores (four Cortex-A57 and four Cortex-A53) plus a Cortex-R7 dual lock-step safety core thrown in for good measure. Not enough for Level 5, but it can handle eight cameras and drive the car’s infotainment system – all to ISO 26262 ASIL-B!
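To give a feel for the data volumes involved, here is a back-of-the-envelope calculation of the raw video bandwidth from the eight cameras mentioned above. The camera format (1920×1080 pixels, 30 frames/s, 2 bytes per pixel) is a hypothetical example, not a specification of the R-Car H3 or of any particular camera.

```python
def raw_video_rate(width_px: int, height_px: int, fps: float, bytes_per_px: float) -> float:
    """Uncompressed video data rate in bytes per second for one camera."""
    return width_px * height_px * fps * bytes_per_px


if __name__ == "__main__":
    per_camera = raw_video_rate(1920, 1080, 30, 2)  # hypothetical 1080p30 camera, 16 bits/pixel
    total = 8 * per_camera
    print(f"per camera: {per_camera / 1e6:.0f} MB/s")  # 124 MB/s
    print(f"eight cameras: {total / 1e9:.2f} GB/s")    # about 1 GB/s
    print(f"pixels to process per second: {8 * 1920 * 1080 * 30 / 1e6:.0f} million")
```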

Conclusion

The obvious conclusion is that DV cameras, Lidar, Radar and even ultrasonics (Sonar) will all be needed to achieve safe Level 4 and 5 autodriving, at least in the short term. Combining the outputs of all these sensor systems into a form the actual driving software can use, a process known as sensor fusion, will be a major challenge. No doubt artificial intelligence in the form of Deep Neural Networks will be used extensively, not only for image processing and object identification but as a reservoir of ‘driving experience’ for decision making.
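Sensor fusion covers a wide range of techniques, from Kalman filters to deep learning. One of the simplest building blocks, shown here as an illustrative sketch, combines independent estimates of the same quantity by weighting each by the inverse of its variance, so the more precise sensor counts for more. The measurement values and noise figures assigned to the camera, radar and Lidar below are hypothetical, and the method assumes unbiased measurements with independent Gaussian errors.

```python
def fuse(measurements: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent (value, variance) estimates.

    Returns the fused value and its variance, which is never larger than the
    smallest input variance.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / var for _, var in measurements]
    total_weight = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, measurements)) / total_weight
    return value, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical range estimates (m) to the same vehicle ahead, as
    # (value, variance) pairs where variance = standard deviation squared
    camera = (41.0, 2.0 ** 2)  # stereo depth: poor precision at this range
    radar = (40.2, 0.5 ** 2)
    lidar = (40.05, 0.1 ** 2)
    value, var = fuse([camera, radar, lidar])
    print(f"fused range: {value:.2f} m, standard deviation {var ** 0.5:.3f} m")
```

The fused estimate sits close to the Lidar reading but is slightly more certain than any single sensor; real systems extend the same idea over time and across many object attributes, which is where the difficulty lies.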
