Personal AI Trainer - Speech Synthesis and Recognition (Part II)

Introduction

In a previous article, I described a concept for a personal AI trainer that could generate custom workout plans based on voice input from the user, then monitor the user's execution of the workout and count their repetitions. In this follow-up article, I will go through the first necessary piece of the full project: conversational AI. I will introduce three compact deep learning models for automatic speech recognition and text-to-speech generation that can run on a portable computer. Finally, I will show three demo apps with conversational AI. The pre-trained models used in the article are available in the attachments. The source code and instructions on how to get started are available in the GitHub repository.

Automatic Speech Recognition

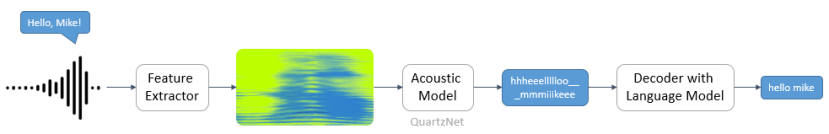

Automatic speech recognition (ASR) refers to the process of converting a raw audio signal of human speech into readable text. Unsurprisingly, as with many other machine intelligence tasks, state-of-the-art performance is achieved using deep learning. Before diving into implementation details, let's examine how a modern ASR system works.

Feature Extraction

Before doing the actual recognition, most ASR systems convert the raw audio waveform into a spectrogram, typically by applying a short-time Fourier transform to short, overlapping frames of the signal. A spectrogram shows how the frequency spectrum of the audio evolves over time. It is used because it emphasizes the information we are interested in (the human voice and the pronounced phonemes) while suppressing unimportant features such as noise and variations caused by the speaker, microphone, etc.
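To make this step more concrete, here is a minimal, dependency-free sketch of a magnitude spectrogram. It assumes 16 kHz audio with a 25 ms Hann window (400 samples) and a 10 ms hop (160 samples), which are common ASR settings but not necessarily the exact ones QuartzNet uses. It uses a naive DFT for readability; real code would use an FFT such as `numpy.fft.rfft`, and ASR front ends usually also apply a Mel filterbank and a logarithm on top.

```python
import cmath
import math
from typing import List, Sequence


def hann_window(n: int) -> List[float]:
    """Periodic Hann window, commonly used for short-time spectral analysis."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal: Sequence[float], frame_len: int = 400, hop: int = 160) -> List[List[float]]:
    """Magnitude spectrogram: one row per frame, one column per frequency bin.

    Defaults assume 16 kHz audio: 400 samples = 25 ms window, 160 samples = 10 ms hop.
    """
    window = hann_window(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        # Window the current frame to reduce spectral leakage at its edges.
        chunk = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        # Naive DFT over the non-negative frequency bins 0..frame_len // 2.
        row = []
        for k in range(frame_len // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len) for n, x in enumerate(chunk))
            row.append(abs(acc))
        frames.append(row)
    return frames
```

Bin `k` corresponds to a frequency of `k * sample_rate / frame_len` Hz, so with the assumed defaults each bin is 40 Hz wide.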

Acoustic Model

The acoustic model is where deep learning comes into play. For each timestep in the spectrogram, the acoustic model predicts the probability of every character in its vocabulary. QuartzNet is a compact neural acoustic model that achieves near state-of-the-art accuracy while being relatively small. This makes it suitable for running on portable devices, such as a Jetson Nano (199-9831).
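The acoustic model's output can be pictured as a matrix with one row of raw scores (logits) per timestep and one column per vocabulary character. The sketch below shows how a softmax turns each row into a probability distribution. It assumes a hypothetical 29-entry vocabulary (space, a to z, apostrophe and a CTC blank written as `_`) and toy logits rather than real QuartzNet outputs.

```python
import math
from typing import List, Sequence, Tuple

# Assumed QuartzNet-style English vocabulary: space, a-z and apostrophe,
# followed by a CTC "blank" symbol (written "_" here). Check the real model config.
VOCAB = [" "] + [chr(c) for c in range(ord("a"), ord("z") + 1)] + ["'", "_"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def frame_predictions(logits_per_frame: Sequence[Sequence[float]]) -> List[Tuple[str, float]]:
    """For each timestep, return the most likely character and its probability."""
    predictions = []
    for logits in logits_per_frame:
        probs = softmax(logits)
        best = max(range(len(probs)), key=probs.__getitem__)
        predictions.append((VOCAB[best], probs[best]))
    return predictions
```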

Decoder

If we were being greedy and simply took the vocabulary character with the highest probability at each timestep, we would notice that the output looks like something one would write at 3 AM on a Friday night. Suppose that the user of our ASR system said "Hello, Mike!"; the acoustic model would output something like "hhheeellllloo___mmmiiikeee". As humans, we would most likely understand what this means, but it would be hard to write a program that could. The job of the decoder is to take the vocabulary character probabilities and convert them into a clean sentence, such as "hello mike". The decoder often uses a statistical language model to find a high-probability sequence of words, given the output of the acoustic model.
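QuartzNet is trained with Connectionist Temporal Classification (CTC), and the simplest decoder for CTC outputs is greedy (best-path) decoding: take the most likely character at each timestep, merge consecutive repeats, then drop the special blank symbol. The sketch below shows that simpler alternative to language-model decoding; the blank symbol `_` and the tiny vocabulary in the test are assumptions for illustration. It also shows why the blank is needed: without one between them, the two l's in "hello" would be merged into one.

```python
from typing import List, Sequence

BLANK = "_"  # assumed symbol for the CTC blank


def best_path(probs: Sequence[Sequence[float]]) -> List[int]:
    """Index of the most probable vocabulary entry at each timestep (greedy)."""
    return [max(range(len(frame)), key=frame.__getitem__) for frame in probs]


def ctc_collapse(labels: str, blank: str = BLANK) -> str:
    """Merge consecutive repeated characters, then drop blank symbols."""
    out = []
    prev = None
    for ch in labels:
        if ch != prev and ch != blank:
            out.append(ch)
        prev = ch  # a blank resets the repeat check, so "l_l" keeps both l's
    return "".join(out)


def greedy_decode(probs: Sequence[Sequence[float]], vocab: Sequence[str]) -> str:
    """Greedy CTC decoding: best character per frame, then collapse."""
    return ctc_collapse("".join(vocab[i] for i in best_path(probs)))
```

For example, `ctc_collapse("hhhe_ll_lloo_  mmiik_ee")` gives `"hello mike"`, while `ctc_collapse("hhheeellllloo")` gives `"helo"`.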

Text-to-Speech

A text-to-speech (TTS) system does the exact opposite of ASR: it takes a human-readable piece of text and generates natural-sounding speech. A simplified view of a modern TTS pipeline reveals that it looks much like an inverted ASR pipeline: a synthesis network turns the text into a spectrogram, and a vocoder network turns the spectrogram into an audio waveform.

Synthesis Network

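The synthesis network generates a Mel spectrogram directly from the input text. A Mel spectrogram is a spectrogram whose frequency axis has been mapped onto the Mel scale, which approximates how humans perceive pitch. The details of how the network works are well beyond the scope of this article; curious readers can find them in the paper describing the model used here, Tacotron 2.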
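The Mel scale is what distinguishes a Mel spectrogram from a plain one: frequency bands are spaced evenly in Mel rather than in Hz, so low frequencies get finer resolution. The sketch below uses the common HTK formula, mel = 2595 · log10(1 + f / 700). Some libraries (for example librosa, by default) use the slightly different Slaney variant, and the 80 bands from 0 to 8 kHz in the example are illustrative assumptions, not necessarily Tacotron 2's exact settings.

```python
import math
from typing import List


def hz_to_mel(f_hz: float) -> float:
    """HTK-style conversion from frequency in Hz to the Mel scale."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(n_mels: int, f_min: float, f_max: float) -> List[float]:
    """Edge frequencies (Hz) of n_mels triangular filters spaced evenly in Mel.

    Filter i spans edges[i]..edges[i + 2] and peaks at edges[i + 1], so
    n_mels + 2 points are needed.
    """
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]
```

With `mel_band_edges(80, 0.0, 8000.0)`, the lowest bands are only about 20 Hz apart, while the highest are more than 250 Hz apart.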

Vocoder Network

The vocoder network takes the output of the synthesis network (Tacotron 2 in our case) and produces an audio waveform that can be played. SqueezeWave is a lightweight vocoder, based on WaveGlow, that is suitable for running on portable devices, such as a Jetson Nano (199-9831).
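A useful mental model of the vocoder is as an upsampler: each Mel spectrogram frame corresponds to a fixed number of audio samples (the hop length), so the length of the generated waveform follows roughly from the number of frames. The sketch below assumes the WaveGlow-style settings commonly paired with Tacotron 2 (22,050 Hz audio and a 256-sample hop); check the configuration of the models you actually use.

```python
import math

# Assumed audio settings (WaveGlow-style); verify against the actual model configs.
SAMPLE_RATE = 22050  # audio samples per second
HOP_LENGTH = 256     # audio samples per Mel spectrogram frame


def output_samples(n_frames: int, hop_length: int = HOP_LENGTH) -> int:
    """Approximate number of waveform samples a vocoder produces for n_frames frames."""
    return n_frames * hop_length


def output_seconds(n_frames: int, hop_length: int = HOP_LENGTH, sample_rate: int = SAMPLE_RATE) -> float:
    """Approximate duration of the generated audio in seconds."""
    return output_samples(n_frames, hop_length) / sample_rate


def frames_for_seconds(seconds: float, hop_length: int = HOP_LENGTH, sample_rate: int = SAMPLE_RATE) -> int:
    """Number of Mel frames needed to cover at least `seconds` of audio."""
    return math.ceil(seconds * sample_rate / hop_length)
```

Under these assumptions, `frames_for_seconds(1.0)` returns 87, so the synthesis network has to produce roughly 86 frames for every second of speech.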

Implementation Details

So far, we have mentioned three models that will be used for ASR and TTS: QuartzNet, Tacotron 2, and SqueezeWave. Training them from scratch would take a long time. Luckily, pre-trained models are freely available on NVIDIA NGC and in the SqueezeWave GitHub repository. For convenience, these are also provided as an attachment to this article. For portability, the models are provided in ONNX format. However, we will convert them to TensorRT engines, which are optimised for the specific system they will run on and deliver higher inference performance. The source code and instructions on how to get started are available in the GitHub repository.
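As a rough idea of what the ONNX-to-TensorRT conversion involves, here is a minimal sketch using the TensorRT Python API. It assumes TensorRT 8.x (JetPack 4.6 or later on the Jetson Nano) and is not necessarily how the repository does it. Models with variable-length inputs, such as Tacotron 2, additionally need an optimisation profile describing the allowed input shapes, which this sketch does not cover.

```python
def build_engine(onnx_path: str, engine_path: str, fp16: bool = True) -> None:
    """Parse an ONNX model and write a serialised TensorRT engine to disk.

    Assumes the TensorRT 8.x Python bindings are installed.
    """
    import tensorrt as trt  # imported lazily so this module loads without TensorRT

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    # The ONNX parser requires an explicit-batch network definition.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parsing failed:\n" + "\n".join(errors))

    config = builder.create_builder_config()
    if fp16 and builder.platform_has_fast_fp16:
        # Half precision can speed up inference on GPUs with fast FP16 support.
        config.set_flag(trt.BuilderFlag.FP16)

    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")
    with open(engine_path, "wb") as f:
        f.write(serialized)
```

Usage would look like `build_engine("quartznet.onnx", "quartznet.engine")`, where the file names are hypothetical. The `trtexec` tool that ships with TensorRT can do the same from the command line with its `--onnx`, `--saveEngine` and `--fp16` options. Because an engine is tuned for a specific GPU and TensorRT version, it should be built on the device it will run on.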

Samples

Let's finish off with some demos of programs written using the models above.

The first program is a simple ASR demo that constantly listens to the microphone and waits for the trigger keyword "Mike" to be said. Once the keyword is detected, the program records a 5-second sample from the microphone and passes it through the ASR pipeline described above. Once complete, the recognised text is printed in the terminal:
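The control flow of this first demo can be sketched as follows. This is not the repository's code: `read_chunk` (returns the next block of microphone samples, or an empty list when the stream ends) and `transcribe` (runs the ASR pipeline on a list of samples) are hypothetical callables. The sketch spots the keyword by running ASR on each short chunk, which is an assumption, and the 16 kHz default sample rate is based on what QuartzNet models typically expect.

```python
from typing import Callable, List, Sequence


def record(read_chunk: Callable[[], Sequence], seconds: float = 5.0, sample_rate: int = 16000) -> list:
    """Collect seconds * sample_rate samples (fewer if the stream ends early)."""
    needed = int(seconds * sample_rate)
    samples: list = []
    while len(samples) < needed:
        chunk = read_chunk()
        if not chunk:  # microphone stream closed
            break
        samples.extend(chunk)
    return samples[:needed]


def wake_word_loop(
    read_chunk: Callable[[], Sequence],
    transcribe: Callable[[Sequence], str],
    keyword: str = "mike",
    record_seconds: float = 5.0,
    sample_rate: int = 16000,
) -> List[str]:
    """Listen until the keyword is heard, then record, transcribe and print a command."""
    commands = []
    while True:
        chunk = read_chunk()
        if not chunk:
            break
        # Keyword spotting by transcribing the short chunk (an assumption of this sketch).
        if keyword in transcribe(chunk).lower().split():
            text = transcribe(record(read_chunk, record_seconds, sample_rate))
            print(text)
            commands.append(text)
    return commands
```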

The second program is a simple TTS demo that waits for text-based user input in the terminal and converts it to speech:
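The second demo boils down to a read, synthesise, play loop. In the sketch below, `synthesize` (text to audio, for example Tacotron 2 followed by SqueezeWave) and `play` (sends audio to the speakers) are hypothetical callables standing in for the real pipeline; typing `quit` or reaching end of input (e.g. Ctrl+D) stops the loop.

```python
from typing import Any, Callable


def tts_loop(
    synthesize: Callable[[str], Any],
    play: Callable[[Any], None],
    read_line: Callable[[str], str] = input,
) -> int:
    """Read text from the terminal, speak it, and return how many lines were spoken."""
    spoken = 0
    while True:
        try:
            text = read_line("Text to speak: ").strip()
        except EOFError:  # end of input, e.g. Ctrl+D or end of piped text
            break
        if not text:
            continue  # ignore empty lines
        if text.lower() in {"quit", "exit"}:
            break
        play(synthesize(text))
        spoken += 1
    return spoken
```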

The last program combines ASR and TTS with an extremely basic natural language understanding (NLU) system to create a voice assistant:
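An extremely basic NLU system can be as simple as keyword matching. The sketch below is a hypothetical example of that idea, not the demo's actual logic: the intents, keywords and responses are made up. In a voice assistant, the text recognised by ASR would be passed to `respond`, and the reply would then be sent to TTS.

```python
import re
from typing import Optional

# Hypothetical intents, each triggered by any of its keywords.
INTENTS = {
    "greet": ("hello", "hi", "hey"),
    "workout": ("workout", "exercise", "train"),
    "goodbye": ("bye", "goodbye"),
}

RESPONSES = {
    "greet": "Hello! How can I help you?",
    "workout": "Let's start with ten squats.",
    "goodbye": "Goodbye, see you next time!",
}

FALLBACK = "Sorry, I did not understand that."


def classify(text: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the text, or None."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    for intent, keywords in INTENTS.items():
        if words & set(keywords):
            return intent
    return None


def respond(text: str) -> str:
    """Map recognised text to a reply that can be passed to TTS."""
    intent = classify(text)
    return RESPONSES[intent] if intent else FALLBACK
```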

Conclusion

This article served as the first stepping stone towards a personal AI trainer by building a conversational AI app. In the following article, we will build the next stepping stone: a computer vision system that recognises the human skeleton and counts exercise repetitions. Stay tuned!